4 Introduction to Generalized Linear Models

Author: Grace Tompkins

Last Updated: August 5, 2022

4.1 Introduction

Although linear models have the potential to answer many research questions, we may be interested in finding the association between an outcome and a set of covariates where the outcome is not necessarily continuous or normally distributed. For example, a researcher may be interested in the relationship between the number of cavities and oral hygiene habits in adolescent patients, or perhaps a researcher is interested in identifying covariates that are related to food insecurity in rural populations. In these settings where we do not have a normally distributed outcome, linear regression model assumptions do not hold, and we cannot use them to analyze such data. Generalized linear models (GLMs) are an extension of linear regression models that allow us to use a variety of distributions for the outcome. In fact, linear regression is a special case of a GLM.

In this section, we will introduce the generalized linear model framework with an emphasis for model fitting in R.

4.2 List of R Packages

In this section, we will be using the packages catdata, MASS, AER, performance, faraway , and car. We can load these packages in R after installing them by the following:

4.3 Generalized Linear Model Framework

The generalized linear model is comprised of three components:

The Random Component: The distribution of the independently and identically distributed (i.i.d.) response variables are assumed to come from a parametric distribution that is a member of the exponential family. These include (but are not limited to) the binomial, Poisson, normal, exponential, and gamma distributions,

The Systematic Component: The linear combination of explanatory variables and regression parameters, and

The Link Function: The function that relates the mean of the distribution of \(Y_i\) to the linear predictor through

\[ g(\mu_i) = \beta_0 + \beta_1x_{i1} + \beta_2x_{i2} + ...+ \beta_px_{ip} \] where \(\mu_i = E[Y_i]\) is the mean of outcome and \(x_{i1}, ..., x_{ip}\) are the \(p\) covariates for individual/subject \(i\). We note that there is no error term on this model, unlike in the usual linear regression model. This is because we are modelling the mean of the outcome and thus a random error term is not needed.

4.3.1 Assumptions

We need to satisfy a number of assumptions to use the GLM framework:

- The outcome \(Y_i\) is independent between subjects and comes from a distribution that belongs to the exponential family,

- There is a linear relationship between a transformation of the mean and the predictors through the link function, and

- The errors are uncorrelated with constant variance, but not necessarily normally distributed.

We also assume that there is no multicollinearity among explanatory variables.

Multicollinearity (sometimes referred to as collinearity) occurs when two or more explanatory variables are highly correlated, such that they do not provide unique or independent information in the regression model. If the degree of correlation is high enough between variables, it can cause problems when fitting and interpreting the model. One way to detect multicollinearity is to calculate the variation inflation factor (VIF) for each covariate in a model. The general guideline is that a VIF larger than 10 indicates that the model has problems estimating the coefficients possibly due to multicollinearity. We will show in Section 4.6 how to use R functions to calculate the VIF after model building.

We note that our methodology will be particularly sensitive to these assumptions when sample sizes are small. When collecting data, we also want to ensure that the sample is representative of the population of interest to answer the research question(s).

4.3.2 Link Functions

Recall that we are modelling the mean of the outcome through a link function as in \[ g(\mu_i) = \beta_0 + \beta_1x_{i1} + \beta_2x_{i2} + ...+ \beta_px_{ip}. \] The link function will essentially transform a non-linear outcome to a linear outcome allowing us to fit a generalized linear model. For each distribution in the exponential family, there is a canonical link which is recommended to use as simplifies the process of finding maximum likelihood estimates in our model by ensuring that the mean of our outcome is mapped to \((-\infty, \infty)\) so we do not need to worry about constraints when optimizing. It also ensures \(\boldsymbol{x}^T\boldsymbol{y}\) is a sufficient statistic for \(\boldsymbol{\beta}\).

The following is a table containing commonly used distributions, their canonical links, and the corresponding name used in R:

| Distribution of \(Y\) | Canonical Link | Family parameter in R glm function |

|---|---|---|

| Normal (used for symmetric continuous data) | Identity: \(g(\mu) = \mu\) | family = gaussian(link = "identity") |

| Binomial (Binary data is a special case where n = 1) | Logistic: \(g(\mu) = \log\left(\frac{\mu}{n-\mu}\right)\) | family = binomial(link = "logit") |

| Poisson (used for discrete count data) | Log: \(g(\mu) = \log(\mu)\) | family = poisson(link = "log") |

| Gamma (used for continuous, positive, skewed or heteroskedastic data) | Reciprocal: \(g(\mu) = \frac{1}{\mu}\) | family = Gamma(link = "inverse") |

Each link function will dictate how we interpret the model parameters. For example, using a logistic link for binomial outcome data (also known as logistic regression), we interpret our parameters \(\beta\) as log odds ratios. More details on this can be found in the dedicated logistic regression module in Section 4.6. When using a log link on Poisson data, our model parameters estimate log relative rates, which we will detail in Section 4.8.

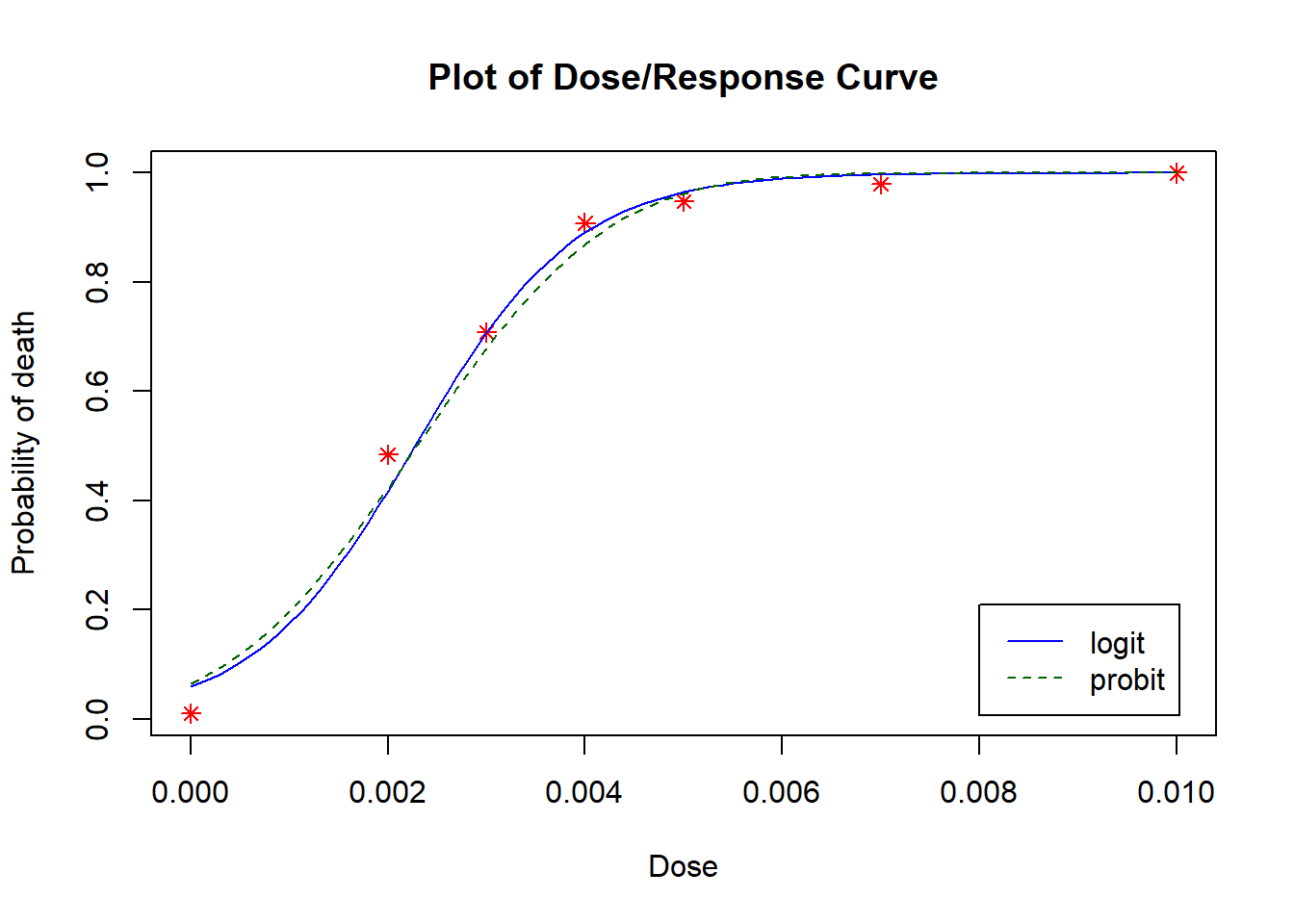

We note that sometimes we will use link functions other than the canonical link. For example, it is common for researchers evaluating dose-response relationships for binary outcomes to use the probit link instead of the canonical logistic link. The probit link is the inverse of the standard normal cumulative distribution function. We will also provide a detailed example for this in Section 4.7.2.

To fit a GLM in R, we use the glm() function. In this function, we can specify:

formula: a description of the model to be fit, similar to that in a regular linear model. For example, to fit a glm with outcomeyand covariatesx1andx2, we would writey ~ x1 + x2.family: a description of the error distribution and link function. That is, we specify the type of GLM to fit using the calls in the third column of the above table.data: the name of the data frameweights: (optional) a vector of column of weights.

We will show concrete examples of how to fit different types of GLMs in the following sections.

4.4 Notes on Model Selection

When fitting GLMs, it is important to perform model selection procedures and assess the model fit before interpreting the results. We aim to find a parsimonious model that addresses our research questions. That is, we aim to find the simplest model that explains the relationship between the outcome and covariate(s) of interest.

One of the most common ways to compare models against each other is through the likelihood ratio test (LRT). For the likelihood ratio test, we can compare nested models. That is, we can compare a full model to a nested model that contains a subset of variables that appear in the full model (and no other variables or transformations). Likelihood ratio tests tend to be the preferred method for building logistic regression models where we want to draw claims and perform hypothesis tests.

We typically start model selection with a full main-effects only model and look at removing the least significant covariates in the full model. We can see if these covariates can be removed in the model by testing the full model against one that does not contain those two covariates by a LRT. The LRT compares the residual deviance from both models we are comparing, with degrees of freedom (df) equal to the difference in the number of covariates between the two models. We are specifically testing the null hypothesis \(H_0\): the simpler model is adequate compared to the more complex model. That is, if the \(p\)-value from the test is small, we reject the null and conclude that the simpler model is not adequate, and we should use the fuller model. We can perform an LRT in R by using the anova() function with test = "LRT" in the function call.

For nested or non-nested models, we can also perform model selection by either Akaike information criterion (AIC) or Bayesian information criterion (BIC) where a smaller value represents a better fit. Although both AIC and BIC are similar, research has shown that each are appropriate for different tasks, as discussed here. For the problems discusses in the following sections, AIC and BIC are comparable and either are appropriate for use.

In the following sections, we will outline examples of fitting, assessing, and interpreting various of generalized linear models for commonly used distributions.

4.5 Normally Distributed Outcomes

A generalized linear model with a normally distributed outcome using the canonical link (identity) is the same as a linear regression model.

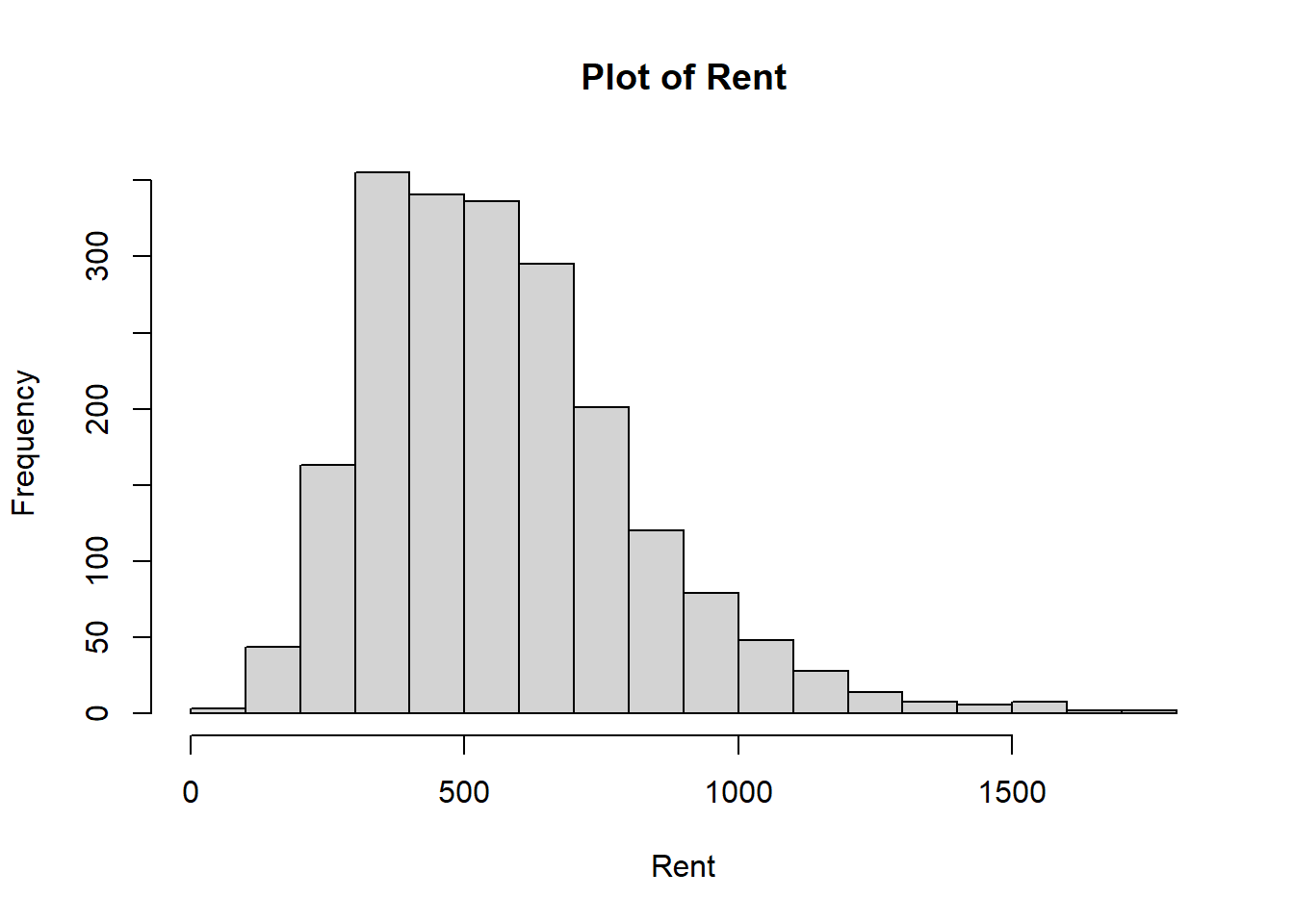

4.5.1 Example

We will be demonstrating the use of a glm on normally distributed data using the rent data set from the catdata package in R. This data set contains information on 2053 units’ rent (in euros), rent per squared meter (in euros), number of rooms, and other covariates for renal units in Munich in 2003. A full list and description of the variables can be found using by typing ?rent in the R console and reading through the documentation. We read in this data set with the following code, and look at the first 6 observations by;

## rent rentm size rooms year area good best warm central tiles

## 1 741.39 10.90 68 2 1918 2 1 0 0 0 0

## 2 715.82 11.01 65 2 1995 2 1 0 0 0 0

## 3 528.25 8.38 63 3 1918 2 1 0 0 0 0

## 4 553.99 8.52 65 3 1983 16 0 0 0 0 0

## 5 698.21 6.98 100 4 1995 16 1 0 0 0 0

## 6 935.65 11.55 81 4 1980 16 0 0 0 0 0

## bathextra kitchen

## 1 0 0

## 2 0 0

## 3 0 0

## 4 1 0

## 5 1 1

## 6 0 0We wish to see what main factors are related to rental prices, and use this model to predict the rental prices of units not included in the sample.

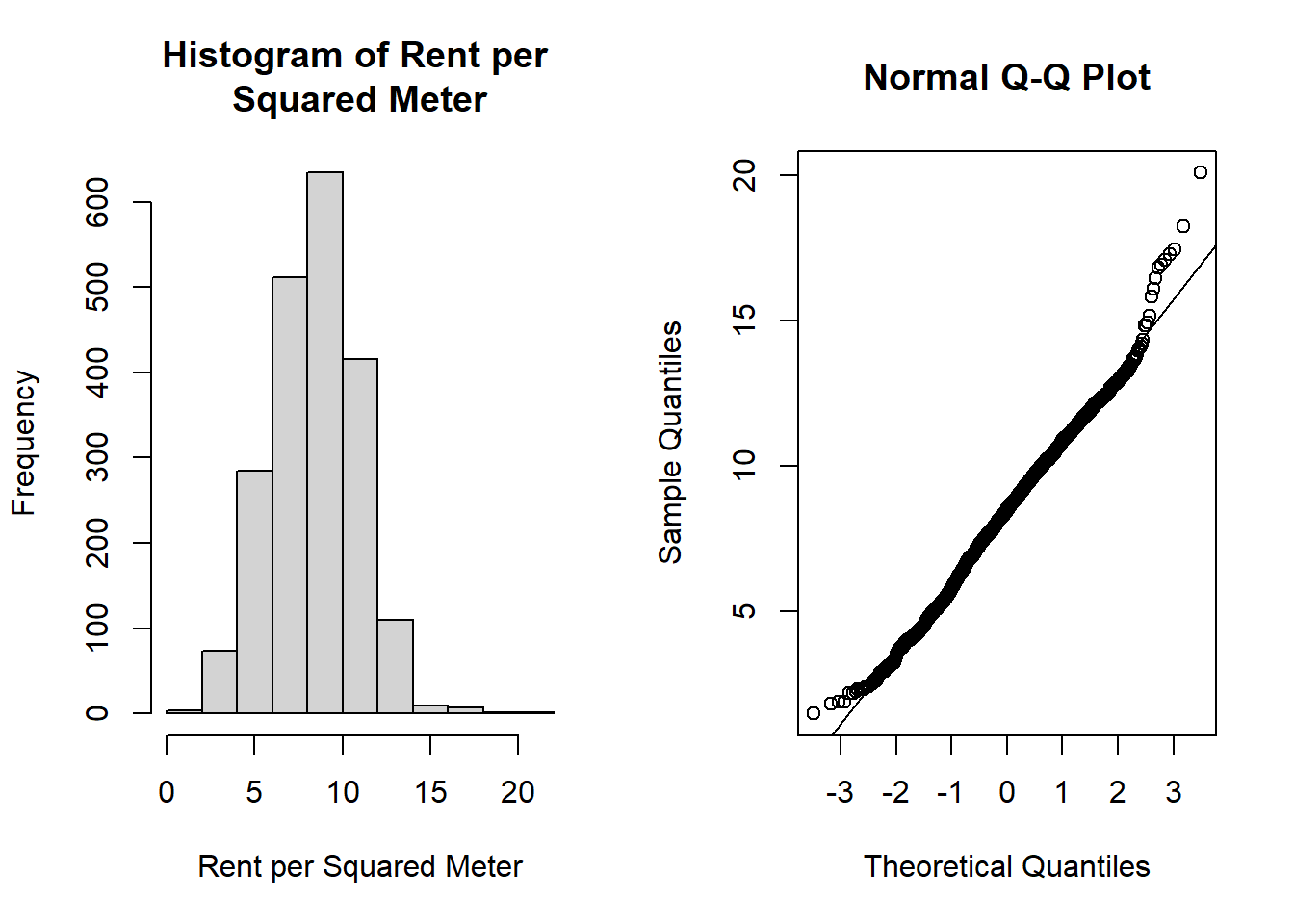

First, we need to assess the distribution of the outcome. We are going to use rentm, which is the clear rent per square meter in euros, as the outcome of interest. To assess the normality of the outcome, we can plot a histogram of the distribution and create a quantile-quantile (Q-Q) plot. To do so in R, we perform the following commands:

# plot two plots side by side

par(mfrow = c(1,2))

#plot the histogram

hist(rent$rentm, main = "Histogram of Rent per \nSquared Meter",

xlab = "Rent per Squared Meter")

#plot the qq plot, with reference line

qqnorm(rent$rentm)

qqline(rent$rentm)

Figure 4.1: Plots Used for Assessing Normality

From the histogram of rentm in Figure 4.1, we see that we have a fairly symmetric distribution. In the Q-Q plot, most points lie on the line, indicating that we have evidence to suggest the normality assumption is satisfied. That is, the normal distribution seems to be an appropriate distribution to assume for our outcome \(Y\) in our GLM.

Let’s fit a generalized linear model to this data set using the glm() function in R, recalling that some covariates are categorical and need to be assigned as a factor() while fitting the model:

# fit a full model

rentm_fit1 <- glm(rentm ~ size + factor(rooms) + year + factor(area) +

factor(good) + factor(best) + factor(warm) +

factor(central) + factor(tiles) + factor(bathextra) +

factor(kitchen), data = rent, family = gaussian(link = "identity"))

# see summary of model

summary(rentm_fit1)##

## Call:

## glm(formula = rentm ~ size + factor(rooms) + year + factor(area) +

## factor(good) + factor(best) + factor(warm) + factor(central) +

## factor(tiles) + factor(bathextra) + factor(kitchen), family = gaussian(link = "identity"),

## data = rent)

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -29.056738 4.353750 -6.674 3.21e-11 ***

## size -0.012989 0.003553 -3.656 0.000262 ***

## factor(rooms)2 -0.962336 0.168848 -5.699 1.38e-08 ***

## factor(rooms)3 -1.222089 0.211164 -5.787 8.27e-09 ***

## factor(rooms)4 -1.464680 0.286182 -5.118 3.38e-07 ***

## factor(rooms)5 -1.262989 0.445118 -2.837 0.004593 **

## factor(rooms)6 -1.348122 0.681699 -1.978 0.048111 *

## year 0.020691 0.002210 9.363 < 2e-16 ***

## factor(area)2 -0.579401 0.355480 -1.630 0.103277

## factor(area)3 -0.649166 0.366119 -1.773 0.076363 .

## factor(area)4 -0.930954 0.362002 -2.572 0.010192 *

## factor(area)5 -0.663130 0.360154 -1.841 0.065733 .

## factor(area)6 -1.104201 0.412643 -2.676 0.007513 **

## factor(area)7 -1.881931 0.411139 -4.577 5.00e-06 ***

## factor(area)8 -1.033445 0.419070 -2.466 0.013744 *

## factor(area)9 -1.070518 0.352590 -3.036 0.002427 **

## factor(area)10 -1.413725 0.426175 -3.317 0.000925 ***

## factor(area)11 -1.905430 0.413710 -4.606 4.37e-06 ***

## factor(area)12 -0.746397 0.392809 -1.900 0.057556 .

## factor(area)13 -1.063768 0.386219 -2.754 0.005934 **

## factor(area)14 -2.012162 0.422025 -4.768 2.00e-06 ***

## factor(area)15 -1.450753 0.453376 -3.200 0.001396 **

## factor(area)16 -2.097239 0.379343 -5.529 3.65e-08 ***

## factor(area)17 -1.656931 0.412166 -4.020 6.03e-05 ***

## factor(area)18 -1.031686 0.397764 -2.594 0.009563 **

## factor(area)19 -1.650084 0.381287 -4.328 1.58e-05 ***

## factor(area)20 -1.643962 0.433051 -3.796 0.000151 ***

## factor(area)21 -1.531664 0.422369 -3.626 0.000295 ***

## factor(area)22 -2.188120 0.534875 -4.091 4.47e-05 ***

## factor(area)23 -1.942936 0.641178 -3.030 0.002475 **

## factor(area)24 -2.111553 0.505552 -4.177 3.08e-05 ***

## factor(area)25 -1.779586 0.376323 -4.729 2.41e-06 ***

## factor(good)1 0.494288 0.115915 4.264 2.10e-05 ***

## factor(best)1 1.550206 0.323708 4.789 1.80e-06 ***

## factor(warm)1 -1.901138 0.291068 -6.532 8.22e-11 ***

## factor(central)1 -1.261988 0.199043 -6.340 2.82e-10 ***

## factor(tiles)1 -0.677180 0.118902 -5.695 1.41e-08 ***

## factor(bathextra)1 0.701636 0.165547 4.238 2.35e-05 ***

## factor(kitchen)1 1.388492 0.180393 7.697 2.17e-14 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for gaussian family taken to be 4.182963)

##

## Null deviance: 12486.0 on 2052 degrees of freedom

## Residual deviance: 8424.5 on 2014 degrees of freedom

## AIC: 8804.7

##

## Number of Fisher Scoring iterations: 2We note that we specifiedfamily = gaussian(link = "identity") in our model, but we could also have left this parameter out of the model as the default family is set to Gaussian for the glm() function, or fit the model using the lm() function.

All covariates appear to be significantly associated with the outcome rentm. As some of the levels of the area(municipality) covariate are insignificant, we can perform an LRT to see if the area covariate is needed. To do so, we fit a model without area and compare it to the model that includes area as a covariate using the anova() function:

# fit a model without area

rentm_fit2 <- glm(rentm ~ size + factor(rooms) + year +

factor(good) + factor(best) + factor(warm) +

factor(central) + factor(tiles) + factor(bathextra) +

factor(kitchen), data = rent, family = gaussian(link = "identity"))

# perform LRT

anova(rentm_fit2, rentm_fit1, test = "LRT")## Analysis of Deviance Table

##

## Model 1: rentm ~ size + factor(rooms) + year + factor(good) + factor(best) +

## factor(warm) + factor(central) + factor(tiles) + factor(bathextra) +

## factor(kitchen)

## Model 2: rentm ~ size + factor(rooms) + year + factor(area) + factor(good) +

## factor(best) + factor(warm) + factor(central) + factor(tiles) +

## factor(bathextra) + factor(kitchen)

## Resid. Df Resid. Dev Df Deviance Pr(>Chi)

## 1 2038 8845.0

## 2 2014 8424.5 24 420.51 2.441e-11 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The null hypothesis we are testing is that the simpler model (model without area) is adequate compared to the fuller model. We have a small \(p\)-value here, indicating that we reject the null hypothesis and conclude that our model fit is better when we do include area as a covariate.

We can continue model building in this fashion, however as a GLM fitted with the identity link is the same as fitting a linear model, readers are directed to Section 3.3 for more information on model fitting, diagnostics, and interpretations.

4.6 Bernoulli Distributed Outcomes (Logistic Regression)

Logistic regression allows us to quantify the relationship between an outcome and other factors similar to traditional linear regression. However, traditional linear regression is limited to continuous outcomes and is thus not suitable for modelling binary outcomes. This is because the normality assumption of the outcome will be violated.

More specifically, logistic regression relates a binary outcome \(\bm{Y_i}\) to a set of covariates \(\bm{x_i}\) through the mean, as \[ g(\mu_i) = \bm{x}_i^T\bm{\beta} \] where \(Y_i\) is the binary outcome for individual \(i\), \(bm{x}_i\) is a \(p \times 1\) vector of covariates for individual \(i\), and \(g(\cdot)\) is a link function. For logistic regression, we use the logit link, meaning we express our model as

\[ \text{log} \left[\frac{P(Y_i=1|\bm{x}_i)}{P(Y_i=0|\bm{x}_i)} \right] = \text{log} \left[\frac{\pi_i}{1-\pi_i} \right]=\bm{x_i}^T\bm{\beta} \] That is, we model the natural logarithm of the ratio of the probability that \(Y_i\) is one versus zero. We denote the probability that \(Y_i\) is one given covariates \(\bm{x}_i\) as \(\pi_i\). We refer to the quantity \(\text{log} \left[\frac{\pi_i}{1-\pi_i} \right]\) as the log-odds (the logarithm of the odds) where the odds is the ratio of the probability an event occurring (\(P(Y_i = 1)\)) versus not occurring (\(P(Y_i = 0)\)). For example, in a roll of a standard six-sided die, the odds of rolling a three is \[ \text{Odds} = \frac{P(\text{3 is rolled})}{P(\text{3 is not rolled})} = \frac{(1/6)}{(5/6)} = 1/5 = 0.2 \] We emphasize that the odds are not equal to the probability of an event happening. The probability of rolling a three on a standard die is \(1/6 = 0.167\), which is not equal to the odds, however the two quantities are related by the definition of the odds.

Notice that in our model that there is no random error term. This may be surprising as we typically see a random error term in linear regression models, however because we are modelling the mean of the outcome a random error term is not needed.

Our primary interest is in estimating the coefficients \(\bm{\beta}\) in our model. These coefficients can be interpreted as log odds ratio of the outcome for a one unit change in the corresponding covariate. For example, if we consider a discrete covariate \(x_1\) representing disease presence, we would interpret \(\beta_1\) as the estimated log odds ratio of the outcome \(Y\) for those with the disease versus without, controlling for other covariates in the model. For continuous covariates such as age, we would interpret the coefficient as the estimated log odds ratio associated with a one year increase in age, controlling for other covariates. We will show examples of the model interpretation for real data examples in the following sections, with a focus on building, evaluating, and interpreting models in R.

4.6.1 Example

Let us look at an example using the heart data set from the catdata package in R. This data set contains a retrospective sample of 462 males between ages 15 and 64 in South Africa where the risk of heart disease is considered high. We have data on whether or not the subject has coronary heart disease (CHD) (y = 1 indicates subject has CHD), measurements of systolic blood pressure (sbp), cumulative tobacco use (tobacco), low density lipoprotein cholesterol (ldl), adiposity (adiposity), whether or not the subject has a family history of heart disease (famhist = 1 indicates family history), measures of type-A behavior (typea), a measure of obesity (obestiy), current alcohol consumption (alcohol), and the subject’s age (age).

data("heart", package = "catdata") # load the data set heart from catdata package

# here we specify package because there are other data sets named

# heart in other loaded packages

# convert to data frame

heart <- data.frame(heart)

# view first 6 rows of data

head(heart)## y sbp tobacco ldl adiposity famhist typea obesity alcohol age

## 1 1 160 12.00 5.73 23.11 1 49 25.30 97.20 52

## 2 1 144 0.01 4.41 28.61 0 55 28.87 2.06 63

## 3 0 118 0.08 3.48 32.28 1 52 29.14 3.81 46

## 4 1 170 7.50 6.41 38.03 1 51 31.99 24.26 58

## 5 1 134 13.60 3.50 27.78 1 60 25.99 57.34 49

## 6 0 132 6.20 6.47 36.21 1 62 30.77 14.14 45For illustrative purposes, we will convert age into a categorical variable to have a multi-level categorical variable in our analysis. We will convert our age into 10-year age categories: 1: 15 to 24, 2: 25 to 34, 3: 35 to 44, 4: 45 to 54, 5: 55 to 64. We emphasize that this decision is just to show readers how to work with categorical factors. We convert our variable into a factor using the following code:

#make a copy of age

heart$age_f <- heart$age

# overwrite it, making groups by age

heart$age_f[heart$age %in% 15:24] <- 1

heart$age_f[heart$age %in% 25:34] <- 2

heart$age_f[heart$age %in% 35:44] <- 3

heart$age_f[heart$age %in% 45:54] <- 4

heart$age_f[heart$age %in% 55:64] <- 5From this data set, we’d like to see if there is a relationship between CHD diagnosis and tobacco use. We wish to control for other factors in our analysis, which we can do using a logistic regression model.

Before building logistic regression models in R, we need to pre-process or “clean” the data. The first thing we can do is ensure the covariates in our data set are the correct type (continuous, categorical, etc) so that the model will be appropriately fit. The variables sbp, tobacco, adiposity, obesity, and alcohol, are continuous covariates and thus do not need to be specified as such. The variable famhist is a binary variable, and age.f is a categorical variable so these need to be specified as categorical variables or “factors” in R. We also need to convert the data set to a data frame. We do so with the following:

# specify categorical variables as factors

heart$famhist_f <- as.factor(heart$famhist)

heart$age_f <- as.factor(heart$age_f)Other things we may need to deal with in the data cleaning stage include missing data and duplicated responses. In our case, we do not have to deal with any of these issues and will continue with our analysis.

4.6.1.1 Model Fitting

To fit a logistic regression model in R, we fit a generalized linear model using the glm() function and specify a logistic link function by using the family=binomial(link = "logit")" argument. For example, we can build the main-effects only logistic regression model considering all covariates previously described by:

#build the logistic model

heart_modelmaineffects <- glm(y ~ sbp + tobacco + ldl + adiposity + famhist_f +

typea + obesity + alcohol + age_f,

family=binomial(link = "logit"), data=heart)

#show the output

summary(heart_modelmaineffects)##

## Call:

## glm(formula = y ~ sbp + tobacco + ldl + adiposity + famhist_f +

## typea + obesity + alcohol + age_f, family = binomial(link = "logit"),

## data = heart)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -6.166777 1.389558 -4.438 9.08e-06 ***

## sbp 0.007399 0.005745 1.288 0.197755

## tobacco 0.083147 0.026648 3.120 0.001807 **

## ldl 0.168207 0.059890 2.809 0.004976 **

## adiposity 0.027937 0.029331 0.952 0.340868

## famhist_f1 0.949461 0.229867 4.130 3.62e-05 ***

## typea 0.039052 0.012308 3.173 0.001510 **

## obesity -0.076045 0.045265 -1.680 0.092959 .

## alcohol -0.001241 0.004499 -0.276 0.782638

## age_f2 1.867250 0.792464 2.356 0.018460 *

## age_f3 1.899604 0.796106 2.386 0.017027 *

## age_f4 2.179759 0.809370 2.693 0.007078 **

## age_f5 2.710949 0.809071 3.351 0.000806 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 596.11 on 461 degrees of freedom

## Residual deviance: 468.28 on 449 degrees of freedom

## AIC: 494.28

##

## Number of Fisher Scoring iterations: 6Calling summary() on the model fit provides us with various estimates for the regression coefficients (“Estimate”), standard errors (“Std. Error”), and the associated Wald test statistic (“z value”) and \(p\)-value (“Pr(>|z|)”) for the null hypothesis that the corresponding coefficient is equal to zero.

While we could interpret this model and perform hypothesis tests, we must consider model selection to find the most appropriate model to answer our research questions.

We notice from the model output that alcohol, adiposity and sbp covariates have large \(p\)-values for the Wald test that \(\beta_{sbp} = 0\) and \(\beta_{adiposity} = 0\) and \(\beta_{alcohol} = 0\). We can see if these covariates are necessary in the model by testing the full model against one that does not contain those two covariates by a LRT. We do so in R by first fitting the new model, obtaining the residual deviance from both models we are comparing, and performing the LRT using the degrees of freedom (df) equal to the difference in the number of covariates between the two models (here, we removed 3 covariates and thus we have 3 degrees of freedom). The null hypothesis here is that heart_model2 is adequate compared to heart_modelfull.

# fit the nested model without sbp, alcohol, or adiposity

heart_model2 <- glm(y ~ tobacco + ldl + famhist_f + typea +

obesity + age_f,

family=binomial(link = "logit"), data=heart)

# perform the LRT

anova(heart_model2, heart_modelmaineffects, test = "LRT")## Analysis of Deviance Table

##

## Model 1: y ~ tobacco + ldl + famhist_f + typea + obesity + age_f

## Model 2: y ~ sbp + tobacco + ldl + adiposity + famhist_f + typea + obesity +

## alcohol + age_f

## Resid. Df Resid. Dev Df Deviance Pr(>Chi)

## 1 452 471.07

## 2 449 468.28 3 2.7856 0.4259We have a large \(p\)-value here, indicating that we do NOT reject the null hypothesis. That is, we are comfortable moving forward with the simpler model. Let’s take a look at the model summary:

##

## Call:

## glm(formula = y ~ tobacco + ldl + famhist_f + typea + obesity +

## age_f, family = binomial(link = "logit"), data = heart)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -5.56962 1.16980 -4.761 1.92e-06 ***

## tobacco 0.08307 0.02597 3.199 0.00138 **

## ldl 0.18393 0.05839 3.150 0.00163 **

## famhist_f1 0.93218 0.22816 4.086 4.40e-05 ***

## typea 0.03717 0.01218 3.052 0.00228 **

## obesity -0.03857 0.02976 -1.296 0.19491

## age_f2 1.88884 0.78761 2.398 0.01648 *

## age_f3 2.05322 0.78102 2.629 0.00857 **

## age_f4 2.42502 0.78318 3.096 0.00196 **

## age_f5 3.03253 0.77335 3.921 8.81e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 596.11 on 461 degrees of freedom

## Residual deviance: 471.07 on 452 degrees of freedom

## AIC: 491.07

##

## Number of Fisher Scoring iterations: 6We notice that as we add and remove covariates, the estimates of our coefficients, standard errors, test statistics, and \(p\)-values will change. We see that obestiy does not appear to be significant in the model. We can see if this variable are necessary again by performing an LRT against our second model:

# fit the nested model without obesity

heart_model3 <- glm(y ~ tobacco + ldl + famhist_f + typea + age_f,

family=binomial(link = "logit"), data=heart)

# perform the LRT

anova(heart_model3, heart_model2, test = "LRT")## Analysis of Deviance Table

##

## Model 1: y ~ tobacco + ldl + famhist_f + typea + age_f

## Model 2: y ~ tobacco + ldl + famhist_f + typea + obesity + age_f

## Resid. Df Resid. Dev Df Deviance Pr(>Chi)

## 1 453 472.79

## 2 452 471.07 1 1.716 0.1902We have a moderate \(p\)-value of 0.1902 here, indicating that we do NOT reject the null hypothesis. That is, we are comfortable moving forward with the simpler model (heart_model3). Let’s take a look at the model summary:

##

## Call:

## glm(formula = y ~ tobacco + ldl + famhist_f + typea + age_f,

## family = binomial(link = "logit"), data = heart)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -6.31391 1.02645 -6.151 7.69e-10 ***

## tobacco 0.08394 0.02596 3.233 0.001224 **

## ldl 0.16274 0.05535 2.940 0.003280 **

## famhist_f1 0.92923 0.22777 4.080 4.51e-05 ***

## typea 0.03630 0.01216 2.986 0.002827 **

## age_f2 1.78017 0.78250 2.275 0.022908 *

## age_f3 1.93384 0.77520 2.495 0.012609 *

## age_f4 2.25988 0.77211 2.927 0.003424 **

## age_f5 2.91276 0.76700 3.798 0.000146 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 596.11 on 461 degrees of freedom

## Residual deviance: 472.79 on 453 degrees of freedom

## AIC: 490.79

##

## Number of Fisher Scoring iterations: 6All of the remaining covariates in our model are significantly significant. While we could stop the model selection procedure here, we should also consider interactions and higher-order terms in our model selection.

It is possible that those with a family history of heart disease may have different cholesterol levels than those who do not. We can consider adding in this interaction term to account for the potential difference in cholesterol levels by family history, and its impact on chronic heart disease diagnosis. We further fit another model with the interaction and perform an LRT against the model without the interaction by:

# fit the nested model with an interaction term

heart_model4 <- glm(y ~ tobacco + ldl + famhist_f + typea + age_f + ldl*famhist_f,

family=binomial(link = "logit"), data=heart)

#show the output

# perform the LRT

anova(heart_model3, heart_model4, test = "LRT")## Analysis of Deviance Table

##

## Model 1: y ~ tobacco + ldl + famhist_f + typea + age_f

## Model 2: y ~ tobacco + ldl + famhist_f + typea + age_f + ldl * famhist_f

## Resid. Df Resid. Dev Df Deviance Pr(>Chi)

## 1 453 472.79

## 2 452 463.46 1 9.3244 0.002261 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1We have a small \(p\)-value here, indicating that we reject the null hypothesis that the simpler model fits as well as the larger model. That is, we should include the interaction term in our model. Let’s look at the summary of this model:

##

## Call:

## glm(formula = y ~ tobacco + ldl + famhist_f + typea + age_f +

## ldl * famhist_f, family = binomial(link = "logit"), data = heart)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -5.61061 1.04614 -5.363 8.18e-08 ***

## tobacco 0.08901 0.02644 3.366 0.000762 ***

## ldl 0.01087 0.07413 0.147 0.883425

## famhist_f1 -0.81122 0.62828 -1.291 0.196643

## typea 0.03625 0.01243 2.917 0.003535 **

## age_f2 1.79383 0.78263 2.292 0.021902 *

## age_f3 1.95366 0.77616 2.517 0.011834 *

## age_f4 2.24868 0.77355 2.907 0.003650 **

## age_f5 2.96258 0.76746 3.860 0.000113 ***

## ldl:famhist_f1 0.34624 0.11725 2.953 0.003146 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 596.11 on 461 degrees of freedom

## Residual deviance: 463.46 on 452 degrees of freedom

## AIC: 483.46

##

## Number of Fisher Scoring iterations: 6We notice that the main effects of ldl and famhist_f are now insignificant. However, as we have the interaction term in the model, we tend to want to keep the main effects in the model as well to interpret. We note that there is a higher chance that the interaction will be significant if the main effects are as well.

We also note that for categorical variables, we do not test if individual levels of the covariate should be included. That is, if one level of age_f was not significant, we do not remove that one level. We would need to do an LRT to see if the entire covariate of age_f was necessary. We can do so by the following:

# fit the model without age_f

heart_model5 <- glm(y ~ tobacco + ldl + famhist_f + typea + ldl*famhist_f,

family=binomial(link = "logit"), data=heart)

# perform the LRT

anova(heart_model4, heart_model5, test = "LRT")## Analysis of Deviance Table

##

## Model 1: y ~ tobacco + ldl + famhist_f + typea + age_f + ldl * famhist_f

## Model 2: y ~ tobacco + ldl + famhist_f + typea + ldl * famhist_f

## Resid. Df Resid. Dev Df Deviance Pr(>Chi)

## 1 452 463.46

## 2 456 494.11 -4 -30.651 3.606e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1We have a very small \(p\)-value, indicating that we reject the null hypothesis that the simpler model is better. That is, age_f is necessary in our model and we should use heart_model4 as our final model!

We will compare the final model selected by LRTs to a built-in stepwise model selection procedure in R using the step() function. The step() function evaluates the model on the Akaike information criterion (AIC), where a smaller value represents a better fit. To do so in R, we use this function on the full model and perform a backward selection procedure by:

#fit a full model, including the interaction

heart_full <- glm(y ~ sbp + tobacco + ldl + adiposity + famhist_f +

typea + obesity + alcohol + age_f + ldl*famhist_f,

family=binomial(link = "logit"), data=heart)

step(heart_full, direction = c("backward"))## Start: AIC=487.47

## y ~ sbp + tobacco + ldl + adiposity + famhist_f + typea + obesity +

## alcohol + age_f + ldl * famhist_f

##

## Df Deviance AIC

## - alcohol 1 459.49 485.49

## - adiposity 1 460.12 486.12

## - sbp 1 460.93 486.93

## <none> 459.47 487.47

## - obesity 1 462.01 488.01

## - ldl:famhist_f 1 468.28 494.28

## - typea 1 469.41 495.41

## - tobacco 1 470.81 496.81

## - age_f 4 479.03 499.03

##

## Step: AIC=485.49

## y ~ sbp + tobacco + ldl + adiposity + famhist_f + typea + obesity +

## age_f + ldl:famhist_f

##

## Df Deviance AIC

## - adiposity 1 460.13 484.13

## - sbp 1 460.93 484.93

## <none> 459.49 485.49

## - obesity 1 462.02 486.02

## - ldl:famhist_f 1 468.36 492.36

## - typea 1 469.42 493.42

## - tobacco 1 471.01 495.01

## - age_f 4 479.08 497.08

##

## Step: AIC=484.13

## y ~ sbp + tobacco + ldl + famhist_f + typea + obesity + age_f +

## ldl:famhist_f

##

## Df Deviance AIC

## - sbp 1 461.74 483.74

## <none> 460.13 484.13

## - obesity 1 462.33 484.33

## - ldl:famhist_f 1 469.25 491.25

## - typea 1 469.70 491.70

## - tobacco 1 471.86 493.86

## - age_f 4 487.28 503.28

##

## Step: AIC=483.74

## y ~ tobacco + ldl + famhist_f + typea + obesity + age_f + ldl:famhist_f

##

## Df Deviance AIC

## - obesity 1 463.46 483.46

## <none> 461.74 483.74

## - typea 1 471.02 491.02

## - ldl:famhist_f 1 471.07 491.07

## - tobacco 1 473.92 493.92

## - age_f 4 493.79 507.79

##

## Step: AIC=483.46

## y ~ tobacco + ldl + famhist_f + typea + age_f + ldl:famhist_f

##

## Df Deviance AIC

## <none> 463.46 483.46

## - typea 1 472.38 490.38

## - ldl:famhist_f 1 472.79 490.79

## - tobacco 1 475.87 493.87

## - age_f 4 494.11 506.11##

## Call: glm(formula = y ~ tobacco + ldl + famhist_f + typea + age_f +

## ldl:famhist_f, family = binomial(link = "logit"), data = heart)

##

## Coefficients:

## (Intercept) tobacco ldl famhist_f1

## -5.61061 0.08901 0.01087 -0.81122

## typea age_f2 age_f3 age_f4

## 0.03625 1.79383 1.95366 2.24868

## age_f5 ldl:famhist_f1

## 2.96258 0.34624

##

## Degrees of Freedom: 461 Total (i.e. Null); 452 Residual

## Null Deviance: 596.1

## Residual Deviance: 463.5 AIC: 483.5The final model contains tobacco, ldl, famhist_f, typea, age_f, and the interaction between ldl and famhist_f as covariates. The model chosen by the step() function in this case is exactly the same as the one we obtained by the LRT. However, depending on the order we perform the likelihood ratio tests, or the direction of the stepwise algorithm, we can obtain different model results. Likelihood ratio tests tend to be preferred over AIC based algorithms for building logistic regression models where we want to draw claims and perform hypothesis tests while AIC based algorithms tend to be preferred for forecasting problems. As such, we will continue our analysis with the model chosen by our LRTs.

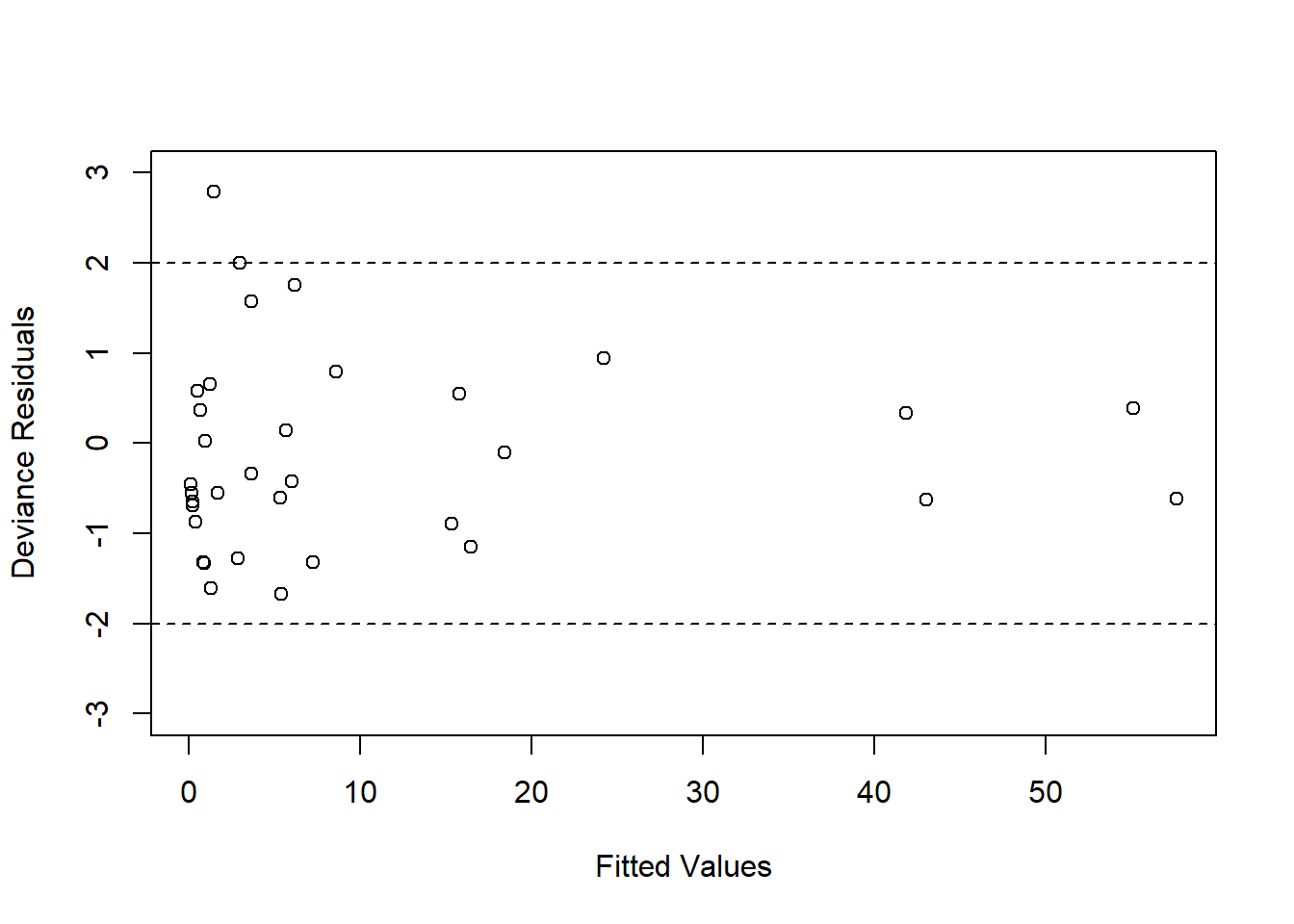

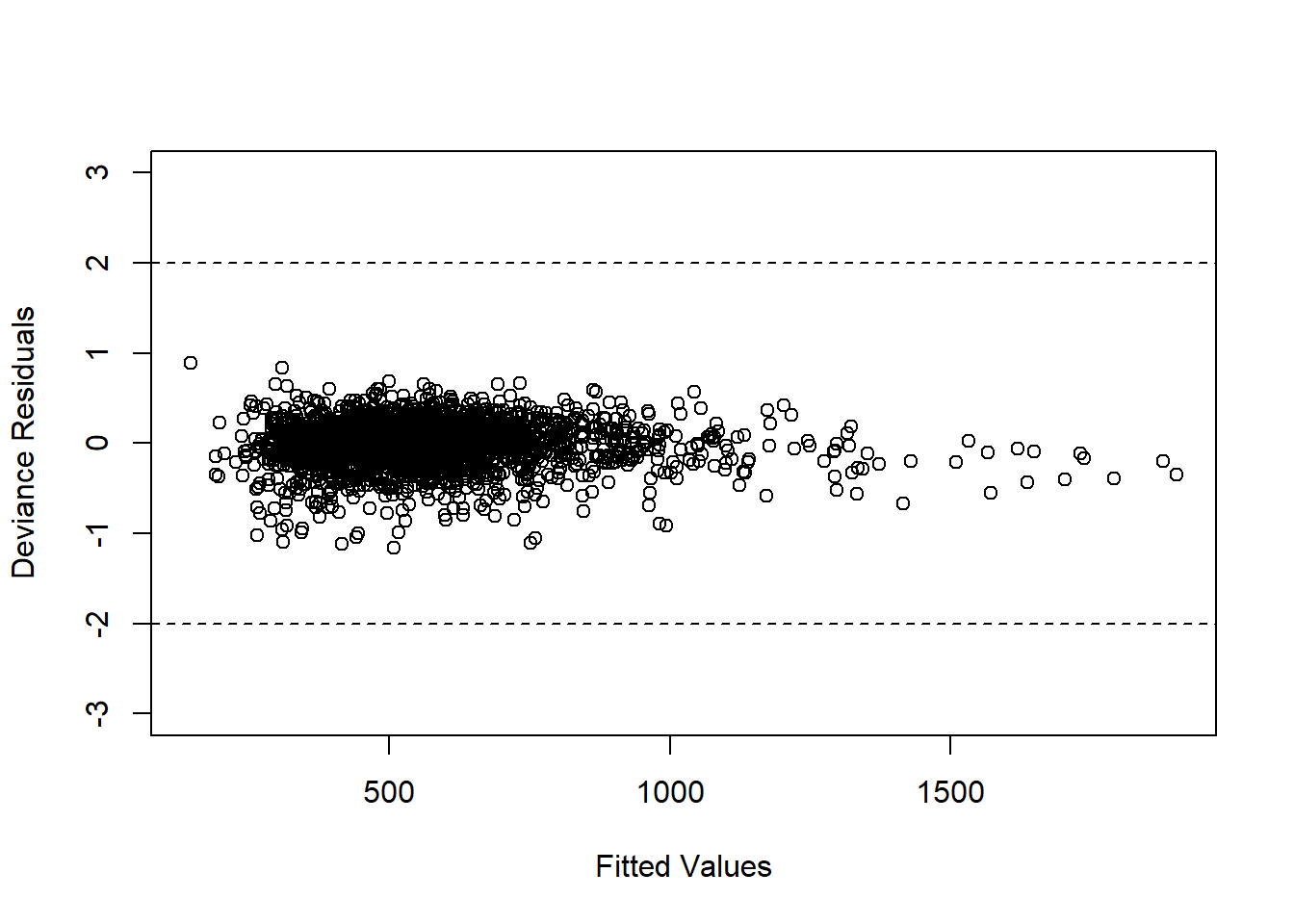

4.6.1.2 Model Diagnostics

Before interpreting the chosen model, we must assess the model fit. We can do so by plotting the deviance residuals to give an idea of the model fit. We can do so in R by:

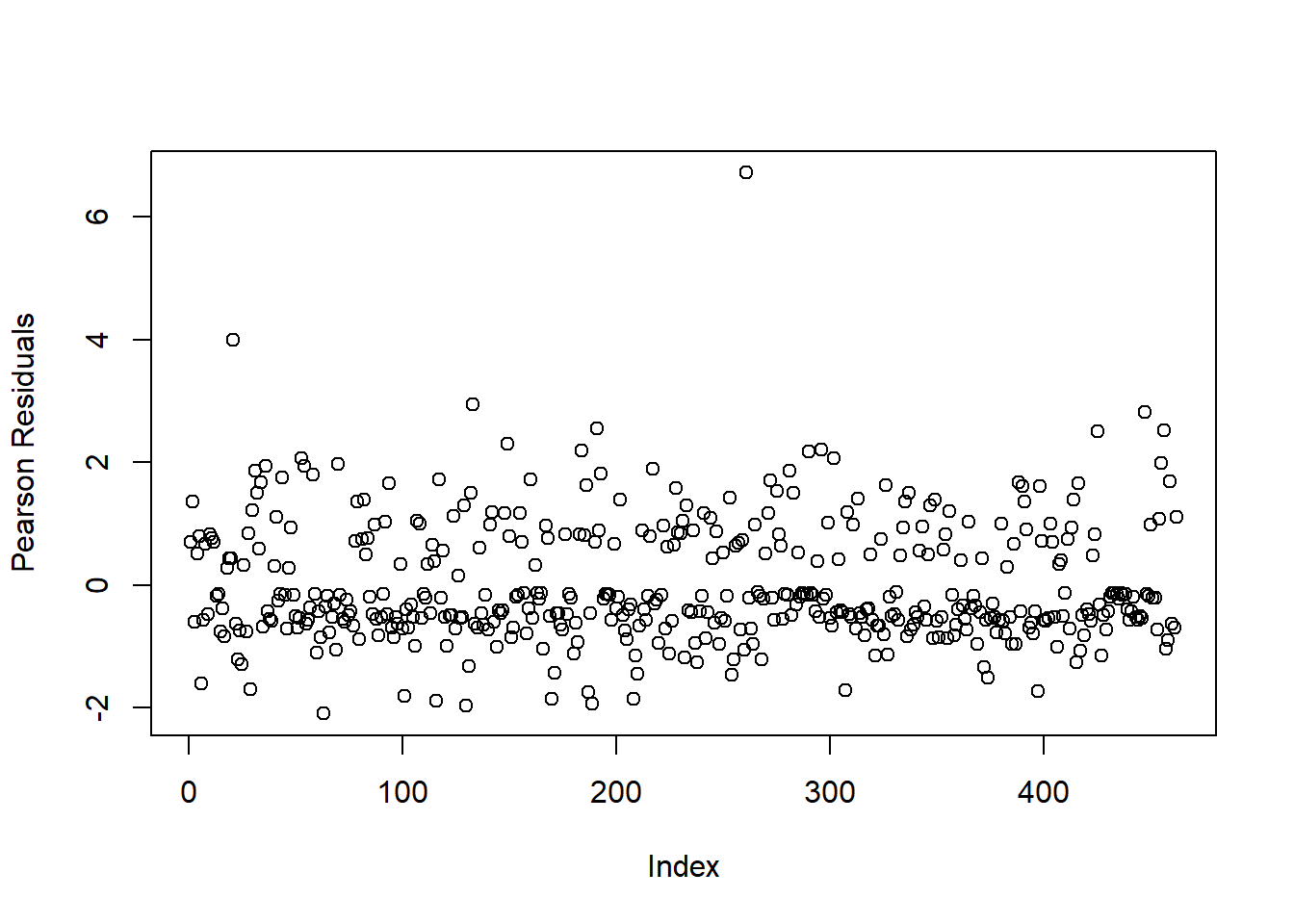

Figure 4.2: Plot of Residuals for Logistic Regression Model

We notice that there are two subjects with high residual values. To identify them, we can use the following code;

# sort residuals largest to smallest and select the first two

sort(residuals(heart_model4, type = "pearson"), decreasing = T)[1:2]## 261 21

## 6.714401 3.987004Subjects 261 and 21 have high deviance residuals. To see if these are influential observations, we can refit the logistic regression model without these observations. If the estimates of our model change greatly, then we should remove these two observations as they may affect inference and predictions made with the logistic regression model.

Let’s make a second data set without the 261st observation and see if the results of the model change.

heart2 <- heart[-261,] # removing the 261st observation

#fit the model using heart2

heart_model4_2 <- glm(y ~ tobacco + ldl + famhist_f + typea + age_f + ldl*famhist_f,

family=binomial(link = "logit"), data=heart2) #use heart2

summary(heart_model4_2) #model summary excluding id 261##

## Call:

## glm(formula = y ~ tobacco + ldl + famhist_f + typea + age_f +

## ldl * famhist_f, family = binomial(link = "logit"), data = heart2)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -6.41271 1.27032 -5.048 4.46e-07 ***

## tobacco 0.08917 0.02647 3.368 0.000757 ***

## ldl 0.01863 0.07452 0.250 0.802549

## famhist_f1 -0.74549 0.63251 -1.179 0.238552

## typea 0.03743 0.01254 2.985 0.002840 **

## age_f2 2.48866 1.05440 2.360 0.018262 *

## age_f3 2.64455 1.04953 2.520 0.011744 *

## age_f4 2.94108 1.04764 2.807 0.004995 **

## age_f5 3.65866 1.04328 3.507 0.000453 ***

## ldl:famhist_f1 0.33576 0.11769 2.853 0.004332 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 593.98 on 460 degrees of freedom

## Residual deviance: 455.17 on 451 degrees of freedom

## AIC: 475.17

##

## Number of Fisher Scoring iterations: 6##

## Call:

## glm(formula = y ~ tobacco + ldl + famhist_f + typea + age_f +

## ldl * famhist_f, family = binomial(link = "logit"), data = heart)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -5.61061 1.04614 -5.363 8.18e-08 ***

## tobacco 0.08901 0.02644 3.366 0.000762 ***

## ldl 0.01087 0.07413 0.147 0.883425

## famhist_f1 -0.81122 0.62828 -1.291 0.196643

## typea 0.03625 0.01243 2.917 0.003535 **

## age_f2 1.79383 0.78263 2.292 0.021902 *

## age_f3 1.95366 0.77616 2.517 0.011834 *

## age_f4 2.24868 0.77355 2.907 0.003650 **

## age_f5 2.96258 0.76746 3.860 0.000113 ***

## ldl:famhist_f1 0.34624 0.11725 2.953 0.003146 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 596.11 on 461 degrees of freedom

## Residual deviance: 463.46 on 452 degrees of freedom

## AIC: 483.46

##

## Number of Fisher Scoring iterations: 6We do see that the estimated coefficients and standard errors change after removing the observation. In particular, the estimated regression coefficients and standard errors of the age_f variable changed greatly. This shows that observation 261 is an influential observation, and should be removed.

We can do the same procedure for removing observation 21:

heart3 <- heart[-c(21, 261),] # removing the 21st (and 261st from prev removal)

# observation

#fit the model using heart3

heart_model4_3 <- glm(y ~ tobacco + ldl + famhist_f + typea + age_f + ldl*famhist_f,

family=binomial(link = "logit"), data=heart3) #use heart3

summary(heart_model4_3) #model summary excluding id 21 and id 261##

## Call:

## glm(formula = y ~ tobacco + ldl + famhist_f + typea + age_f +

## ldl * famhist_f, family = binomial(link = "logit"), data = heart3)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -20.65184 793.20342 -0.026 0.979229

## tobacco 0.08771 0.02635 3.328 0.000874 ***

## ldl 0.03073 0.07479 0.411 0.681168

## famhist_f1 -0.65602 0.63603 -1.031 0.302337

## typea 0.03471 0.01254 2.768 0.005642 **

## age_f2 16.82365 793.20311 0.021 0.983078

## age_f3 16.97712 793.20310 0.021 0.982924

## age_f4 17.26763 793.20310 0.022 0.982632

## age_f5 17.97553 793.20309 0.023 0.981920

## ldl:famhist_f1 0.32012 0.11794 2.714 0.006643 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 591.85 on 459 degrees of freedom

## Residual deviance: 446.13 on 450 degrees of freedom

## AIC: 466.13

##

## Number of Fisher Scoring iterations: 17##

## Call:

## glm(formula = y ~ tobacco + ldl + famhist_f + typea + age_f +

## ldl * famhist_f, family = binomial(link = "logit"), data = heart)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -5.61061 1.04614 -5.363 8.18e-08 ***

## tobacco 0.08901 0.02644 3.366 0.000762 ***

## ldl 0.01087 0.07413 0.147 0.883425

## famhist_f1 -0.81122 0.62828 -1.291 0.196643

## typea 0.03625 0.01243 2.917 0.003535 **

## age_f2 1.79383 0.78263 2.292 0.021902 *

## age_f3 1.95366 0.77616 2.517 0.011834 *

## age_f4 2.24868 0.77355 2.907 0.003650 **

## age_f5 2.96258 0.76746 3.860 0.000113 ***

## ldl:famhist_f1 0.34624 0.11725 2.953 0.003146 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 596.11 on 461 degrees of freedom

## Residual deviance: 463.46 on 452 degrees of freedom

## AIC: 483.46

##

## Number of Fisher Scoring iterations: 6Again we see that the coefficients change significantly and deem the 21st observation to be influential. Thus, we continue our analysis without observation 21 and 261.

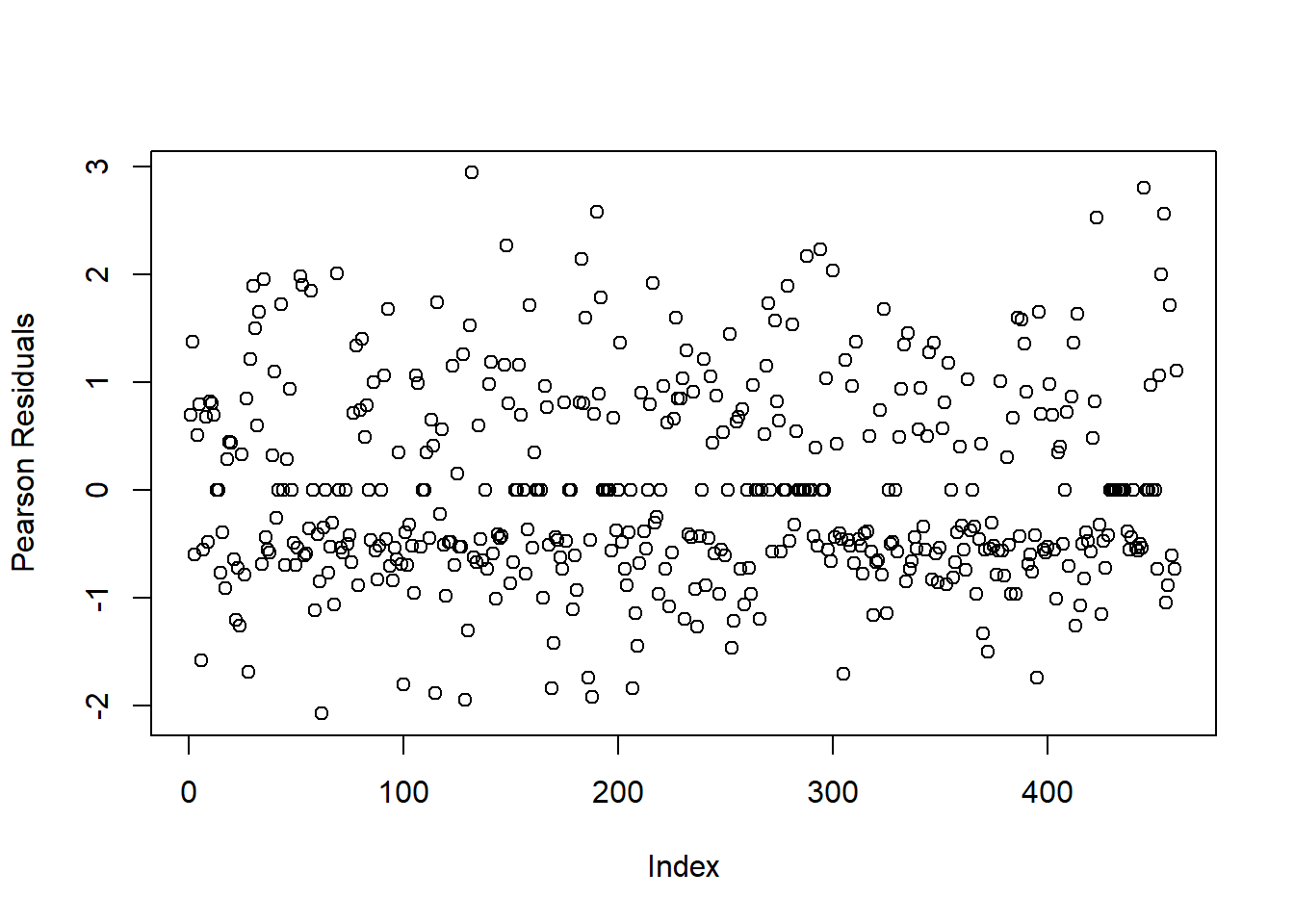

Figure 4.3: Plot of Residuals for Logistic Regression Model with Influential Observation Removed

Now, we see that most residual values fall between \((-2, 2)\) (with no values beyond \(\pm 3\)), which indicates a proper model fit.

We notice that when we look at the model summary, some covariates have very large estimated standard errors and are no longer significant, indicating that after removing the influential observations we should re-do our model fitting.

#fit a full model, including the interaction

heart_full2 <- glm(y ~ sbp + tobacco + ldl + adiposity + famhist_f +

typea + obesity + alcohol + age_f + ldl*famhist_f,

family=binomial(link = "logit"), data=heart3)

step(heart_full2, direction = "both", trace = 0) #trace = 0 means don't print##

## Call: glm(formula = y ~ tobacco + ldl + famhist_f + typea + age_f +

## ldl:famhist_f, family = binomial(link = "logit"), data = heart3)

##

## Coefficients:

## (Intercept) tobacco ldl famhist_f1

## -20.65184 0.08771 0.03073 -0.65602

## typea age_f2 age_f3 age_f4

## 0.03471 16.82365 16.97712 17.26763

## age_f5 ldl:famhist_f1

## 17.97553 0.32012

##

## Degrees of Freedom: 459 Total (i.e. Null); 450 Residual

## Null Deviance: 591.9

## Residual Deviance: 446.1 AIC: 466.1Let’s see the summary of the model:

# fit a new model

heart_model6 <- glm(y ~ tobacco + ldl + famhist_f +

typea + age_f + ldl*famhist_f ,

family=binomial(link = "logit"), data=heart3)

summary(heart_model6)##

## Call:

## glm(formula = y ~ tobacco + ldl + famhist_f + typea + age_f +

## ldl * famhist_f, family = binomial(link = "logit"), data = heart3)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -20.65184 793.20342 -0.026 0.979229

## tobacco 0.08771 0.02635 3.328 0.000874 ***

## ldl 0.03073 0.07479 0.411 0.681168

## famhist_f1 -0.65602 0.63603 -1.031 0.302337

## typea 0.03471 0.01254 2.768 0.005642 **

## age_f2 16.82365 793.20311 0.021 0.983078

## age_f3 16.97712 793.20310 0.021 0.982924

## age_f4 17.26763 793.20310 0.022 0.982632

## age_f5 17.97553 793.20309 0.023 0.981920

## ldl:famhist_f1 0.32012 0.11794 2.714 0.006643 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 591.85 on 459 degrees of freedom

## Residual deviance: 446.13 on 450 degrees of freedom

## AIC: 466.13

##

## Number of Fisher Scoring iterations: 17Although the stepwise procedure (and an LRT) will tell us otherwise, we should remove the age_f variable due to the large, insignificant estimates. It is likely that for this sample, age_f is not adding any value to our model. We refit this model without age_f:

# fit a new model

heart_model7 <- glm(y ~ tobacco + ldl + famhist_f +

typea + ldl*famhist_f ,

family=binomial(link = "logit"), data=heart3)

summary(heart_model7)##

## Call:

## glm(formula = y ~ tobacco + ldl + famhist_f + typea + ldl * famhist_f,

## family = binomial(link = "logit"), data = heart3)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -3.47029 0.74548 -4.655 3.24e-06 ***

## tobacco 0.13821 0.02539 5.444 5.22e-08 ***

## ldl 0.09899 0.07189 1.377 0.16850

## famhist_f1 -0.39576 0.61446 -0.644 0.51953

## typea 0.02383 0.01182 2.016 0.04385 *

## ldl:famhist_f1 0.30096 0.11609 2.592 0.00953 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 591.85 on 459 degrees of freedom

## Residual deviance: 486.83 on 454 degrees of freedom

## AIC: 498.83

##

## Number of Fisher Scoring iterations: 4and see estimates with less extreme values and variances. When we plot the residuals:

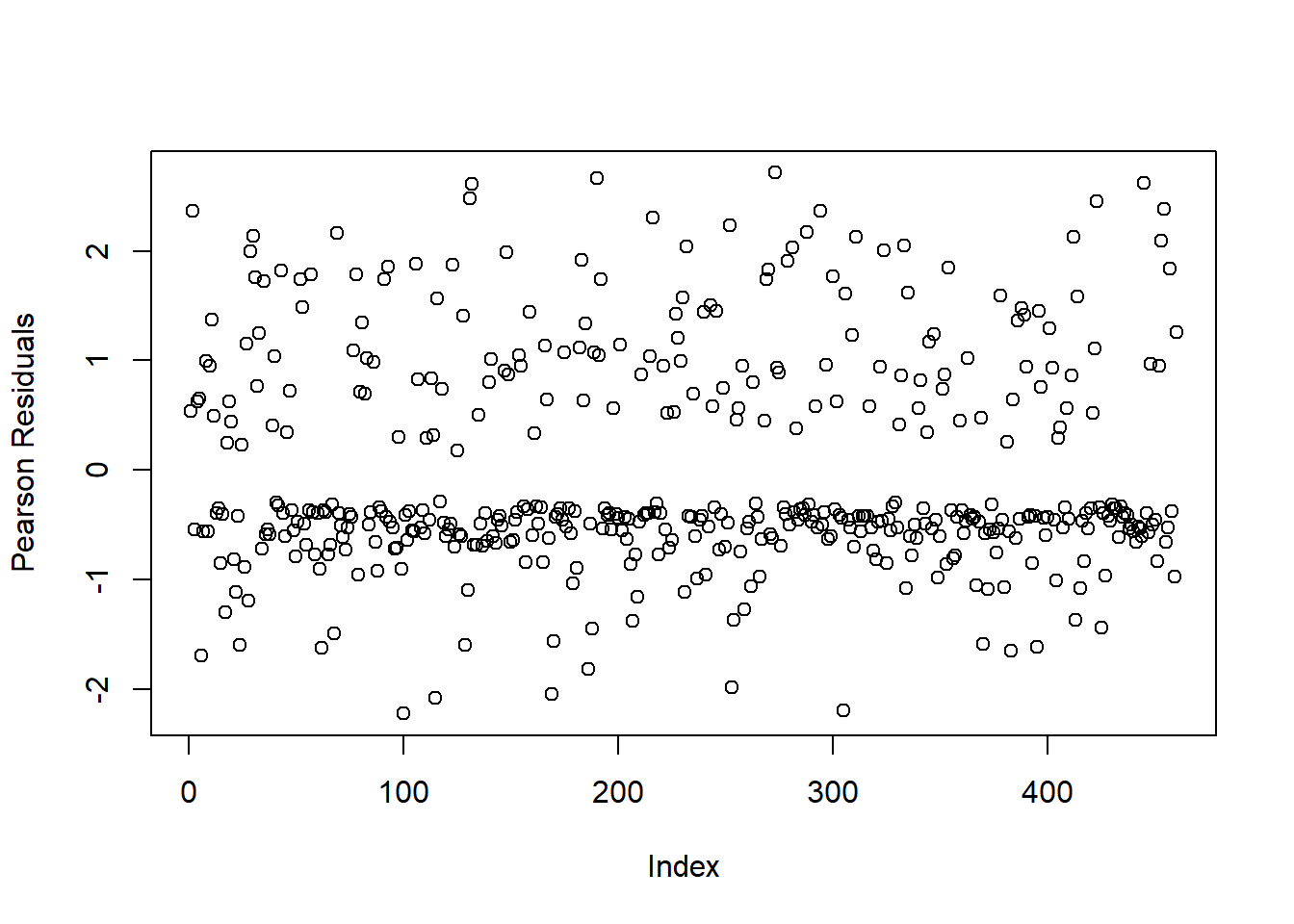

Figure 4.4: Plot of Residuals for Second Regression Modelwith Influential Observation Removed

We see that the residuals are behaving as expected with no extreme values.

We should also check for multicollinearity in our model. We can do so by using the vif() function from the car package. We can do so by the following R code:

# there are multiple vif functions so car:: specifies

# we are using the vif function from the car package

car::vif(heart_model7) ## tobacco ldl famhist_f typea

## 1.034016 1.649904 7.596317 1.013287

## ldl:famhist_f

## 8.480374We see that we only have low-moderate variance inflation factors (VIFs), indicating that multicollinearity is not an issue in this model. We typically are concerned about multicollinearity when VIF values are above 10.

4.6.1.3 Model Interpretation and Hypothesis Testing

Odds Ratios

As previously mentioned, we interpret each coefficients as the log odds ratio of the outcome for a one unit change in the corresponding covariate, controlling for the other covariates in the model. That means, to obtain estimates of the odds ratio, we take the exponential (exp() in R) of the coefficient. The estimates of the standard error for the coefficients (log-odds) will be useful for hypothesis testing and constructing confidence intervals.

Let’s look again at the model we chose from the selection procedure:

##

## Call:

## glm(formula = y ~ tobacco + ldl + famhist_f + typea + ldl * famhist_f,

## family = binomial(link = "logit"), data = heart3)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -3.47029 0.74548 -4.655 3.24e-06 ***

## tobacco 0.13821 0.02539 5.444 5.22e-08 ***

## ldl 0.09899 0.07189 1.377 0.16850

## famhist_f1 -0.39576 0.61446 -0.644 0.51953

## typea 0.02383 0.01182 2.016 0.04385 *

## ldl:famhist_f1 0.30096 0.11609 2.592 0.00953 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 591.85 on 459 degrees of freedom

## Residual deviance: 486.83 on 454 degrees of freedom

## AIC: 498.83

##

## Number of Fisher Scoring iterations: 4In mathematical notation, we can write this model as

\[ \begin{aligned} \text{log} \left[\frac{\pi_i}{1-\pi_i} \right]= \beta_0 + &\beta_1x_1 + \beta_2x_2 + \beta_3x_3 + \beta_4x_4 + \beta_5x_2*x_3 \end{aligned} \] where \(\pi_i\) is the probability of having CHD, \(x_1\) is the measurement of cumulative tobacco use, \(x_2\) is the ldl cholesterol measurement, \(x_3\) is an indicator for family history, and \(x_4\) is the type a measurement.

We are provided with the estimates and standard errors of the log odds ratios, but typically want to interpret and communicate our findings on the scale of odd ratios. To obtain an estimate of the odds ratio for a given covariate, we simply exponentiate the coefficient. For example, the tobacco covariate’s estimated coefficient (\(\widehat{\beta_1}\)) is 0.138, meaning we estimate that a one unit increase in tobacco is associated with a log odds ratio of chronic heart disease equal to 0.138, controlling for the other factors in the model. Alternatively, we can say that a one unit increase in tobacco is associated with an odds ratio of chronic heart disease equal to \(\exp(\) 0.138 \()\) = 1.148, controlling for other factors.

Confidence intervals (CIs) are useful in communicating the uncertainty in our estimates and are typically presented along with our estimate. Confidence intervals are calculated on the log odds ratio scale, and then exponentiated to find the confidence interval for the odds ratio. We calculate a 95% confidence interval for a log odds ratio as:

\[

\widehat{\beta} \pm 1.96\times \widehat{se}(\widehat{\beta})

\]

where \(\widehat{se}(\widehat{\beta})\) is the estimated standard error of the regression coefficient. For example, a 95% confidence interval for the log odds ratio of tobacco is

\[

0.138 \pm 1.960 \times 0.025 = (0.089, 0.187).

\]

Then, to find the 95% CI for the odds ratio, we exponentiate both sides of the CI as:

\[

(\exp(0.089), \exp(0.187)) = (1.093, 1.206)

\]

So, we estimate the odds ratio to be 1.148 (95% CI: (1.093, 1.206)) controlling for the other factors in the model. As this 95% CI does not contain the value of OR = 1 in it, we say that we are 95% confident that higher tobacco use is associated with an increased odds of developing CHD.

For the odds ratios and confidence intervals of the regression coefficients individually, one can also call the confint.default() function

logORs <- cbind(coef(heart_model7), confint.default(heart_model7))

colnames(logORs) <- c("logOR", "Lower", "Upper")

logORs## logOR Lower Upper

## (Intercept) -3.47028789 -4.9314011158 -2.00917466

## tobacco 0.13820940 0.0884465653 0.18797224

## ldl 0.09899415 -0.0419048152 0.23989312

## famhist_f1 -0.39575571 -1.6000802163 0.80856880

## typea 0.02382659 0.0006571497 0.04699603

## ldl:famhist_f1 0.30095626 0.0734244303 0.52848808and exponentiate it to get the ORs:

## Odds Ratio Lower Upper

## (Intercept) 0.03110807 0.007216385 0.1340993

## tobacco 1.14821596 1.092475875 1.2068000

## ldl 1.10405984 0.958961055 1.2711133

## famhist_f1 0.67317113 0.201880323 2.2446931

## typea 1.02411271 1.000657366 1.0481178

## ldl:famhist_f1 1.35115024 1.076187206 1.6963656For another example, let’s look at estimating the odds ratio of CHD for a one unit increase in ldl among those with a family history of CHD, controlling for the other factors. For more complex estimates where we may be interested in combinations of covariates, it can be useful to create a table to determine what regression coefficients we want to use.

Recall the model

\[

\begin{aligned}

\text{log} \left[\frac{\pi_i}{1-\pi_i} \right]= \beta_0 + &\beta_1x_1 + \beta_2x_2 + \beta_3x_3 + \beta_4x_4 + \beta_5x_2x_3.

\end{aligned}

\]

and recall \(x_2\) represents ldl and \(x_3\) represents famhist_f.

We wish to estimate the odds ratio of CHD for a one unit increase in ldl, which is the same as looking at \(x_2\) = 1 versus \(x_2 = 0\) (or \(x_2 = 2\) versus \(x_2 = 1\), and so on, however 1 versus 0 is the simplest example). We also are interested in only those with a family history of CHD, represented by \(x_3 = 1\). All of the other covariates are held constant. So, we are comparing

\[ \begin{aligned} \beta_0 + &\beta_1x_1 + \beta_2(1) + \beta_3(1) + \beta_4x_4 + \beta_5(1)(1) \end{aligned} \] to \[ \begin{aligned} \beta_0 + &\beta_1x_1 + \beta_2(0) + \beta_3(1) + \beta_4x_4 + \beta_5(0)(1) \end{aligned} \] If we look at the difference of these equations, we have \[ \begin{aligned} & \beta_0 + \beta_1x_1 + \beta_2(1) + \beta_3(1) + \beta_4x_4 + \beta_5(1)(1)\\ &-\left(\beta_0 + \beta_1x_1 + \beta_2(0) + \beta_3(1) + \beta_4x_4 + \beta_5(0)(1)\right)\\ \hline & \qquad \qquad \qquad \quad \beta_2 \qquad \quad \quad \quad \qquad \quad+\beta_5 \end{aligned} \] which shows that we should estimate and interpret \(\beta_2 + \beta_5\) to answer this question. From the model output, we estimate the log odds ratio as \(\widehat{\beta_2} + \widehat{\beta_5} = 0.099 + 0.301 = 0.400\). Then, the estimated odds ratio is \(\exp(0.400) = 1.492\). So, we estimate that a one unit increase in low density lipoprotein cholesterol is associated with an odds ratio of CHD equal to 1.492, controlling for other factors.

To estimate the confidence interval, we must find the estimated standard error of \(\widehat{\beta_2} + \widehat{\beta_5}\) manually, construct a confidence interval for this quantity (the logOR), and then exponentiate each bound of the confidence interval. To do so in R, we can use the following code:

varcov <- vcov(heart_model7) #get the variance covariance matrix

L <- c(0, 0, 1, 0, 0, 1) #represents \beta_2 + \beta_5 (first place is beta_0)

var_est <- L%*%varcov %*% L

beta_est <- L%*%coef(heart_model7) #vector of coefficients from model

CI <- c(beta_est - 1.96*sqrt(var_est), beta_est + 1.96*sqrt(var_est))

exp(CI) #exponentiate to get CI for OR## [1] 1.247937 1.783199Probabilities

Perhaps we are interested in estimating or predicting the probability of the outcome instead of the odds ratio for given covariates.

Suppose we are interested in the probability that a 25 year old with spb = 150, tobacco = 0, ldl = 6, adiposity = 24, no family history of CHD, typea = 60, obesity = 30, and alcohol = 10. Using the required information, we can obtain a prediction using the predict() function as:

# make a vector of the new information as it would appear in the original

# dataframe (excluding y). Use colnames(heart) to see the order of variables

newsubject <- data.frame(sbp = 150,

tobacco = 0,

ldl = 6,

adiposity = 24,

famhist = 0,

typea = 60,

obesity = 30,

alcohol = 10,

age = 25,

age_f = 2,

famhist_f = 1

)

newsubject$age_f <- as.factor(newsubject$age_f)

newsubject$famhist_f <- as.factor(newsubject$famhist_f)

predict(heart_model7, newdata = newsubject)## 1

## -0.03674586We estimate that \(\frac{\log{\pi}}{1 - \pi} = -0.037\). So, to estimate the probability, we take the expit() of this estimate, or obtain \[ \frac{\exp(-0.037)}{1 + \exp(-0.037)} = 0.491 \] We estimate this hypothetical individual to have a 49.1% probability of having CHD given their covariates.

4.7 Binominal Distributed/Proportional Outcomes (Logistic Regression on Proportions)

Sometimes when evaluating a binary response, we don’t have data for each individual in the study and cannot fit a logistic regression in the usual way. However, if we have the proportion of outcomes for various subgroups in our data set, we can still model the proportion of outcomes using a binomial generalized linear model. That is, we observe \(y_j/m_j\) for each combination \(j = 1, 2, ..., J\) of our variables \(\boldsymbol{x}\) where \(y_j\) is the number of successes/occurrences in a subgroup \(j\) of size \(m_j\). We note that this method is recommended over treating the proportion as a continuous outcome and fitting a linear regression model. This is because the proportions may not be normally distributed, or even continuous for small \(m\).

We model the expected proportions as a function of the covariates of interest using a binomial regression model (logistic regression). We can fit a generalized linear model two ways: we can either model the response as a pair where the response is cbind(y, m - y) or model the response as y/m where we set weights = m as a parameter in the glm() function. In both settings, we let family = binomial() and specify a link function.

The following example shows the use of the binomial regression model, and explains how we can fit the model in two different ways.

4.7.1 Example

We will be looking at a data set involving the proportions of children who have reached menarche at different ages. The data set contains the average age of the group (which are reasonably homogeneous) (Age), the total number of children in the group (Total), and the number of children in the group who have reached menarche (Menarche). The goal of this analysis is to see quantify the relationship between age and menarche onset. We first load the menarche data set from the MASS package:

# load the data from MASS package

data("menarche")

# look at first 6 observations of the data

head(menarche)## Age Total Menarche

## 1 9.21 376 0

## 2 10.21 200 0

## 3 10.58 93 0

## 4 10.83 120 2

## 5 11.08 90 2

## 6 11.33 88 5We see that for different age groups, we have different sizes of the number of individuals in that group (Total), and the corresponding number of children who have reached menarche (Menarche). The proportion of people who have reached menarche in each age group is then Menarche/Total.

4.7.1.1 Model Fitting

Recall that we can fit a model two ways: fitting the outcome as cbind(y, m - y) or fitting the outcome as y/m where we set weights = m as a parameter in the glm() function. We will show that these models produce the same results. We will fit both models using the canonical logit link. First, we fit the model using the paired response:

menarche_fit1 <- glm(cbind(Menarche, Total - Menarche) ~ Age,

data = menarche,

family = binomial(link = "logit"))

summary(menarche_fit1)##

## Call:

## glm(formula = cbind(Menarche, Total - Menarche) ~ Age, family = binomial(link = "logit"),

## data = menarche)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -21.22639 0.77068 -27.54 <2e-16 ***

## Age 1.63197 0.05895 27.68 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 3693.884 on 24 degrees of freedom

## Residual deviance: 26.703 on 23 degrees of freedom

## AIC: 114.76

##

## Number of Fisher Scoring iterations: 4Next, we fit the model using the proportions and specifying the weights:

menarche_fit2 <- glm(Menarche/Total ~ Age,

data = menarche,

weights = Total,

family = binomial(link = "logit"))

summary(menarche_fit2)##

## Call:

## glm(formula = Menarche/Total ~ Age, family = binomial(link = "logit"),

## data = menarche, weights = Total)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -21.22639 0.77068 -27.54 <2e-16 ***

## Age 1.63197 0.05895 27.68 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 3693.884 on 24 degrees of freedom

## Residual deviance: 26.703 on 23 degrees of freedom

## AIC: 114.76

##

## Number of Fisher Scoring iterations: 4We see that these models are equivalent.

4.7.1.2 Model Diagnostics

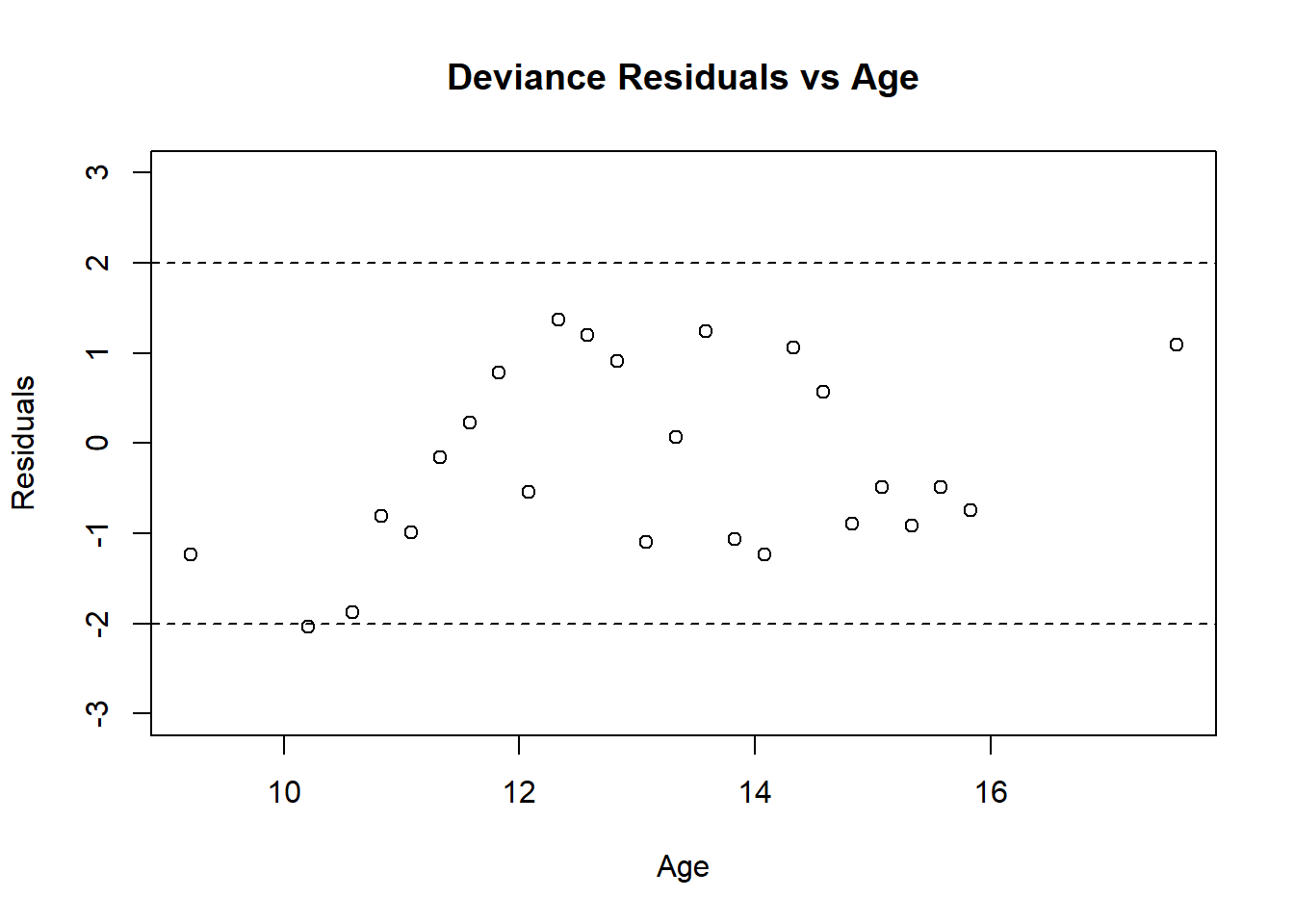

We can look at the model fit by evaluating the residual and fitted values:

# first plot the residual vs dose

resid_menarche <- residuals.glm(menarche_fit1, "deviance")

plot(menarche$Age, resid_menarche, ylim = c(-3, 3),

main = "Deviance Residuals vs Age",

ylab = "Residuals",

xlab = "Age")

abline(h = -2, lty = 2) # add dotted lines at 2 and -2

abline(h = 2, lty = 2)

Figure 4.5: Residual plot for Menarche GLM

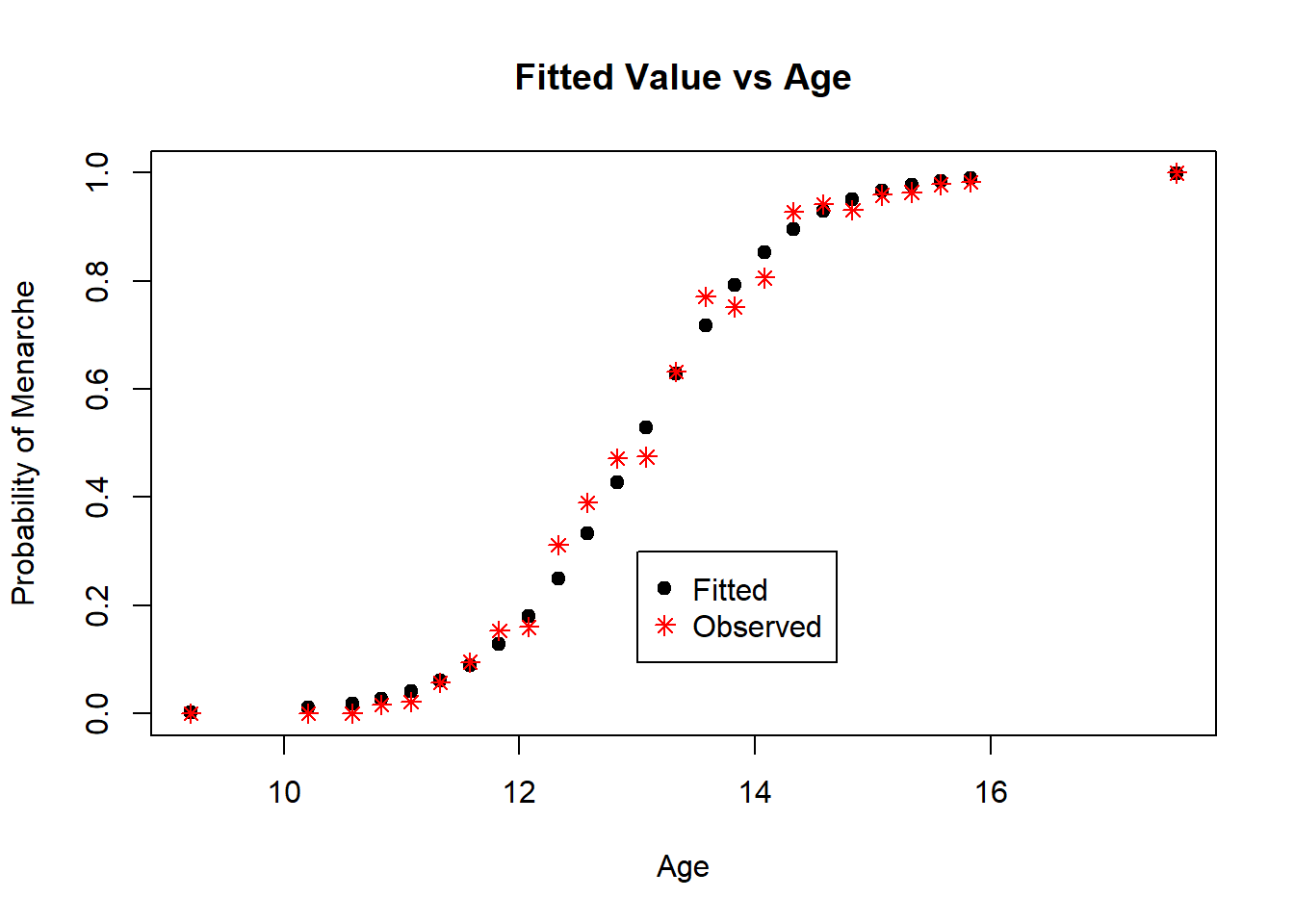

# then plot the fitted versus dose

fitted_menarche <- menarche_fit1$fitted.values

plot(menarche$Age, fitted_menarche, ylim = c(0, 1),

main = "Fitted Value vs Age",

ylab = "Probability of Menarche",

xlab = "Age",

pch = 19)

points(menarche$Age, menarche$Menarche/menarche$Total, col = "red", pch = 8)

legend(13, 0.3, legend = c("Fitted", "Observed"), col = c("black", "red"), pch = c(19, 8))

Figure 4.6: Diagnostic Plot for Probit Model: Fitted Values

The residuals in Figure 4.5 are mostly between -2 and 2, and show no signs of a poor fit. The plot of the fitted and observed values in Figure 4.6 are similar, indicating good prediction for the observations we fit our model on. Overall, this model appears to be a good fit for the data.

4.7.1.3 Model Interpretation

From (either) model output, we see that age is significantly associated with menarche. As we used the logit link, the interpretation of our parameters are log odds ratios. As such, we can interpret the model output as follows:

- \(\beta_0\): At age = 0, the log odds of menarche is estimated to be -21.226 (the odds is estimated to be \(\exp(-21.226)\) = 0.000). This makes sense in the context of our study.

- \(\beta_1\): For a one unit increase in the average age, we estimate the log odds of menarche to be 1.632 times higher (the odds ratio is estimated to be \(\exp(1.632)\) = 5.114 times higher). Confidence intervals can be calculated as in Section 4.6.

From these results, we conclude that age is significantly associated with menarche onset.

We can also estimate the probability of menarche onset for certain ages. For example, let’s estimate the probability of menarche for a 13 year old child based on this model. Our model is \[ \log\left(\frac{\widehat{\pi}_i}{1 - \widehat{\pi}}_i\right) = -21.226 + 1.632*\text{Age}_i \] which we can re-write as \[ \widehat{\pi}_i = \frac{\exp(-21.226 + 1.632*\text{Age}_i)}{1 + \exp(-21.226 + 1.632*\text{Age}_i)}. \] Thus, at age 13, we can estimate the probability of menarche as \[ \widehat{\pi}_i = \frac{\exp(-21.226 + 1.632*13)}{1 + \exp(-21.226 + 1.632*13)}. \] We can calculate this by hand, or use the following commands in R:

# grab the coefficients of the model

est_beta <- menarche_fit1$coefficients #beta[1] is the intercept,

# beta[2] is the coefficient on age

# estimate the probability

est_prob <- exp(est_beta[1] + est_beta[2]*13)/(1 + exp(est_beta[1] + est_beta[2]*13))

print(paste("Estimated probability:", round(est_prob,3))) #print it out, rounded## [1] "Estimated probability: 0.497"Thus, for a 13 year old child, we estimate the probability of menarche to be 49.7%.

4.7.2 Dose-response Models

Suppose we want to quantify a dose-response relationship between a stimulus (dose) and a particular outcome (response). We typically see dose-response relationships in bioassay experiments where we expose groups of living subjects to varying doses of a toxin and determine how many deaths or other binary health outcomes there are within a given time period. Given the concentration of the toxin, we calculate the dose as

\[ x = \text{dose} = \log(\text{concentration}) \] We assume that for each subject in the bioassay study there is a tolerance or threshold dosage where a response will always occur. This value can vary from individual to individual and can be described by a statistical distribution. For each group \(j = 1, 2, \dots, J\), we let \(m_j\) be the total number of subjects in group \(j\), \(x_j\) be the dose applied to all subjects in group \(i\), and \(y_i\) be the number of subjects that responded in group \(j\).

We assume that \(Y_j\) follows a binomial distribution with \(n\) = \(m_j\) and unknown probability of response \(\pi_j\). We can model this using a GLM as \[ g(\pi) = \beta_0 + \beta_1x \] where \(g()\) is a link function.

Choices of \(g()\) include the probit, logit, and complimentary log-log (cloglog). The “best” link function depends on the underlying distribution of the probability \(\pi\). After describing the link functions, we will show in an example in Section 4.7.2.1 that includes how to choose the link function from the data.

Probit Link

If \(\pi(x)\) is normally distributed, use probit link \(g(\pi) = \Phi^{-1}(\pi)\) where \(\Phi\) is the standard normal distribution CDF (cumulative distribution function)

Using this link function, we do not have the interpretation of odds ratios as we are not using the logistic link function. We can re-write the relationship between \(\pi\) and the covariate \(x\) through the link function as \[ \pi(x) = g^{-1}(\beta_0 + \beta_1x) = \Phi\left( \frac{x - \mu}{\sigma} \right) \] where \(\mu\) is the mean and \(\sigma\) is the standard deviation of the tolerance distribution (here we subtract the mean and divide by the standard deviation to create a standard normal distribution).

We may be the median lethal/effective dose at which 50% of the population has a response (\(\delta_{0.5}\)), which is calculated as \[ \delta_{0.5} = \frac{-\beta_0}{\beta_1} \]

which can be calculated using the GLM model output. We can also obtain expressions for the 100\(p\)th percentile of the tolerance distribution (\(\delta_p\)) as \[ \delta_p = \frac{\Phi^{-1}(p) - \beta_0}{\beta_1} \] where \(\Phi^{-1}()\) is the inverse of the standard normal CDF.

Logit Link

If we assume the probability has the form \(\pi(x) = \frac{\exp(\beta_0 + \beta_1x)}{1 + \exp(\beta_0 + \beta_1x)}\), then we can use the logit link \(g(\pi) = \log\left(\frac{\pi}{1 - \pi}\right)\). We interpret the parameters \(\beta_0\) and \(\beta_1\) as log odds/log odds ratios as in our “usual” logistic regression. That is, \(\beta_0\) is the log odds of response for a dose of zero and \(\beta_1\) is the log odds ratio for response associated with a one unit increase in the dose. To find odds/odds ratios, we exponentiate the coefficients.

The median lethal/effective dose is the same as the probit link, which is \[ \delta_{0.5} = \frac{-\beta_0}{\beta_1}. \] It is also possible to obtain expressions for any percentile of the tolerance distribution. That is, the 100\(p\)th percentile of the tolerance distribution for \((0 < p < 1)\) can be found by solving \[ \log{\frac{p}{1-p}} = \beta_0 + \beta_1\delta_p \] where \(\delta_p\) is the dosage we’d like to solve for.

Complimentary Log-log Link

If we assume \(\pi(x) = 1 - \exp(-\exp(\beta_0 + \beta_1x))\) (extreme value distribution), we can use the complementary log-log (cloglog) link \(g(\pi) = \log(-\log(1 - \pi))\)

The interpretation of the parameters when using a complimentary log-log link function is not as “nice” as when using the logit or probit links, however we can still obtain an expression for the median lethal/effective dose, which is \[ \delta_{0.5} = \frac{\log(-\log(1 - 0.5)) - \beta_0}{\beta_1}. \]

4.7.2.1 Example

We use data from a study by (Milesi, Pocquet, and Labbé 2013) to evaluate the dose-response relationship of insecticide dosages and insect fatalities. We will focus on one particular strain of insecticide for the first replicate of the study.

To read in this data, which is located in the “data” folder, we perform the following:

# read in the data

insecticide <- read.table("data/insectdoseresponse.txt", header = T)

# change colnames to english

colnames(insecticide) <- c("insecticide", "strain", "dose", "m", "deaths",

"replicate", "date", "color")

# view first 6 observations

head(insecticide)## insecticide strain dose m deaths replicate date color

## 1 temephos KIS-ref 0.000 100 1 1 26/01/11 1

## 2 temephos KIS-ref 0.002 97 47 1 26/01/11 1

## 3 temephos KIS-ref 0.003 96 68 1 26/01/11 1

## 4 temephos KIS-ref 0.004 98 89 1 26/01/11 1

## 5 temephos KIS-ref 0.005 95 90 1 26/01/11 1

## 6 temephos KIS-ref 0.007 99 97 1 26/01/11 1We are only interested in a subset of this data for the purposes of this example. We are only interested in the first replication of the KIS-ref strain. As such, we subset the data by:

# only keep rows where souche == KIS-ref and replicate = 1

insecticide <- insecticide[insecticide$strain == "KIS-ref" & insecticide$replicate == 1, ]

insecticide## insecticide strain dose m deaths replicate date color

## 1 temephos KIS-ref 0.000 100 1 1 26/01/11 1

## 2 temephos KIS-ref 0.002 97 47 1 26/01/11 1

## 3 temephos KIS-ref 0.003 96 68 1 26/01/11 1

## 4 temephos KIS-ref 0.004 98 89 1 26/01/11 1

## 5 temephos KIS-ref 0.005 95 90 1 26/01/11 1

## 6 temephos KIS-ref 0.007 99 97 1 26/01/11 1

## 7 temephos KIS-ref 0.010 99 99 1 26/01/11 1To fit a dose-response GLM to this data, we need to have the total number of insects in each group, the dose (log(concentration)), and construct the appropriate paired response variable for the regression (y, m - y). In this data set, we already have the dose (dose) and number of events (deaths) and group totals (m). Prior to fitting the glm, we just need to construct the response variable. To do so in R, we perform the following:

# calculate response variable

insecticide$response <- cbind(insecticide$deaths, insecticide$m - insecticide$deaths)

# look at the data

insecticide## insecticide strain dose m deaths replicate date color

## 1 temephos KIS-ref 0.000 100 1 1 26/01/11 1

## 2 temephos KIS-ref 0.002 97 47 1 26/01/11 1

## 3 temephos KIS-ref 0.003 96 68 1 26/01/11 1

## 4 temephos KIS-ref 0.004 98 89 1 26/01/11 1

## 5 temephos KIS-ref 0.005 95 90 1 26/01/11 1

## 6 temephos KIS-ref 0.007 99 97 1 26/01/11 1

## 7 temephos KIS-ref 0.010 99 99 1 26/01/11 1

## response.1 response.2

## 1 1 99

## 2 47 50

## 3 68 28

## 4 89 9

## 5 90 5

## 6 97 2

## 7 99 0We will fit three models, one with each of the previously discussed link functions, using the glm() function. We start with the probit link:

# fit the glm using link = "probit"

insecticide_fit_probit <- glm(response ~ dose,

family = binomial(link = "probit"), data = insecticide)

# look at the model output

summary(insecticide_fit_probit)##

## Call:

## glm(formula = response ~ dose, family = binomial(link = "probit"),

## data = insecticide)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -1.5102 0.1508 -10.02 <2e-16 ***

## dose 658.3608 49.5291 13.29 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 433.593 on 6 degrees of freedom

## Residual deviance: 19.862 on 5 degrees of freedom

## AIC: 45.692

##

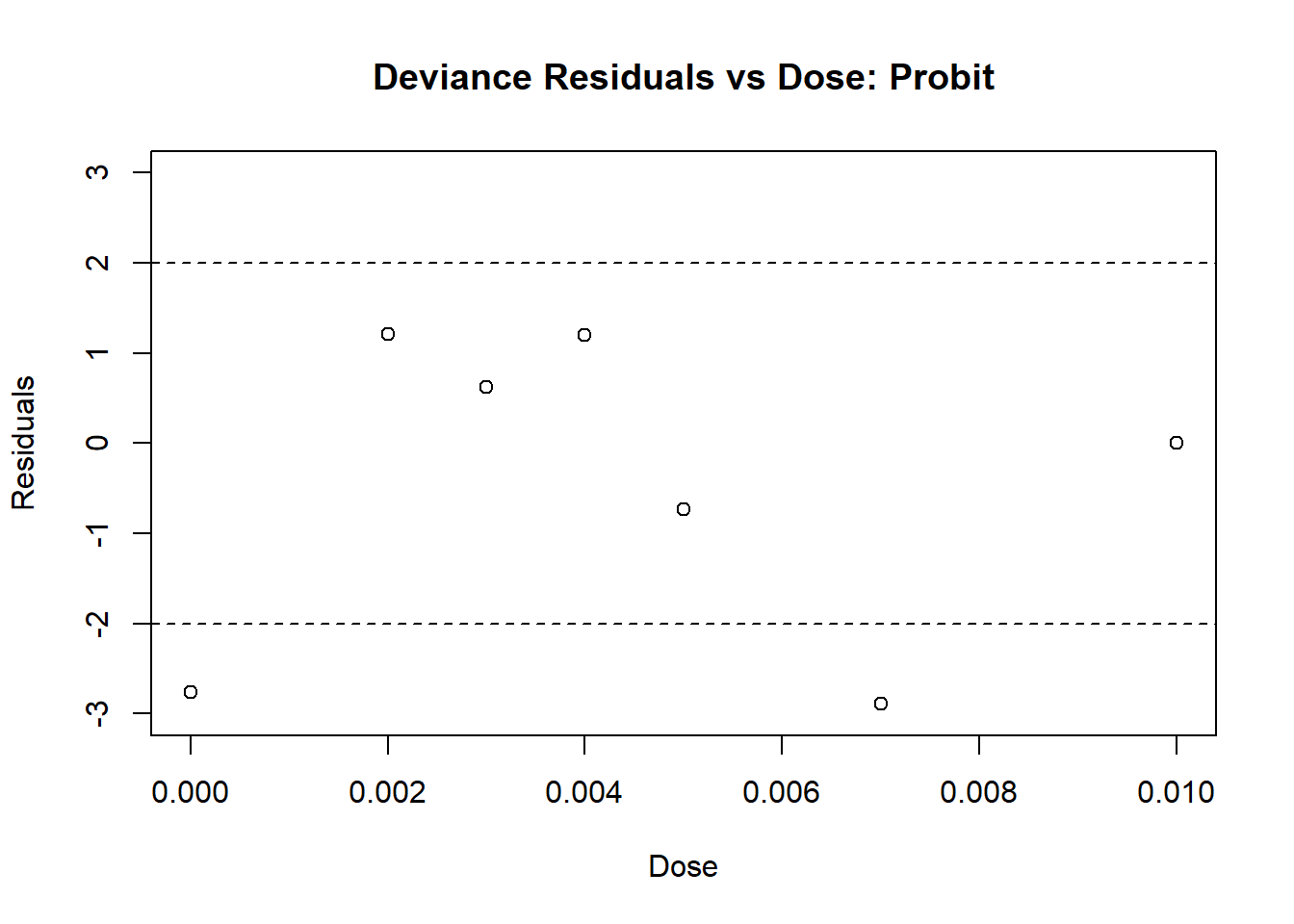

## Number of Fisher Scoring iterations: 6# assess the residuals

# first plot the residual vs dose

resid_probit <- residuals.glm(insecticide_fit_probit, "deviance")

plot(insecticide$dose, resid_probit, ylim = c(-3, 3),

main = "Deviance Residuals vs Dose: Probit",

ylab = "Residuals",

xlab = "Dose")

abline(h = -2, lty = 2) # add dotted lines at 2 and -2

abline(h = 2, lty = 2)

Figure 4.7: Diagnostic Plot for Probit Model: Residuals

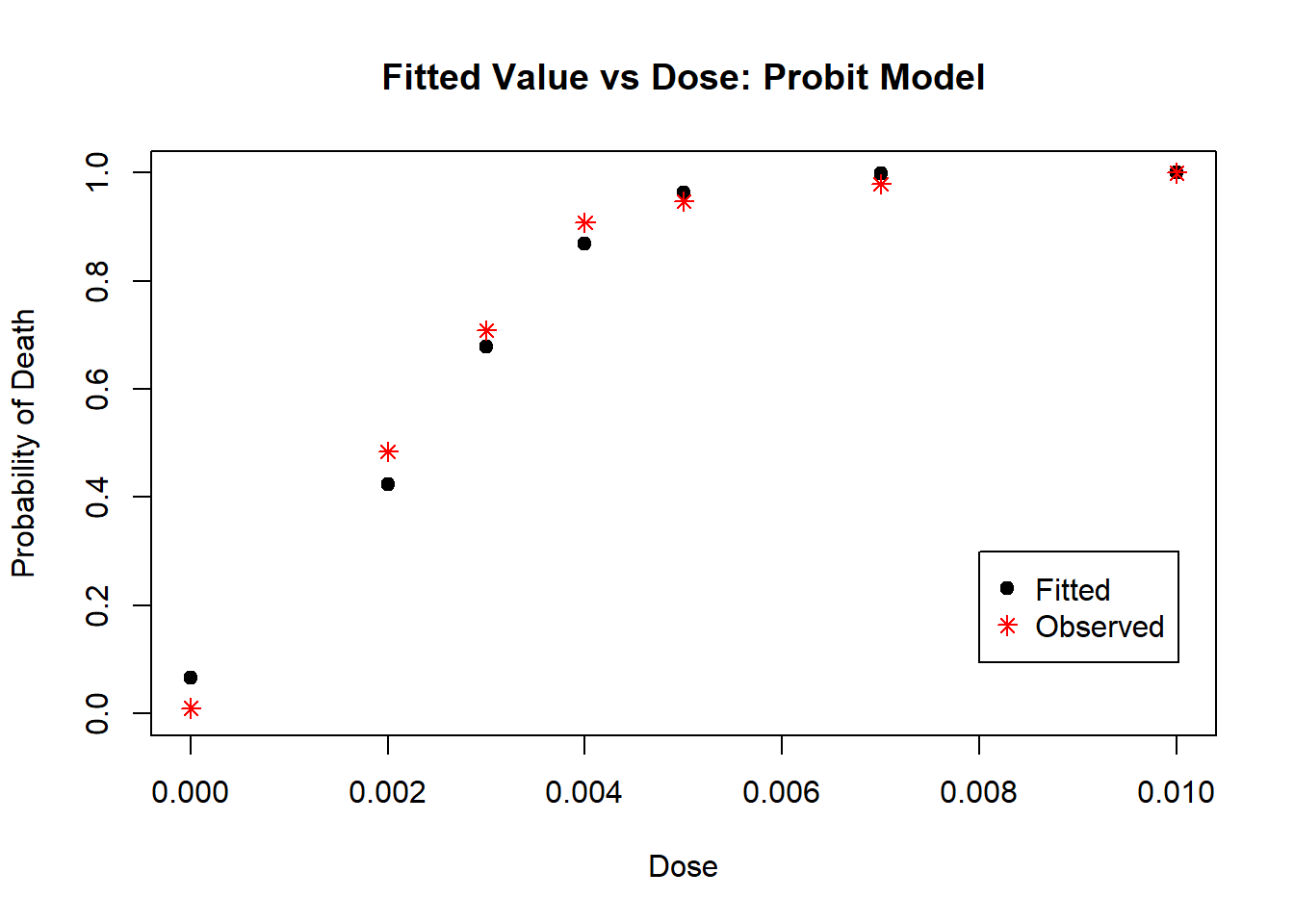

# then plot the fitted versus dose

fitted_probit <- insecticide_fit_probit$fitted.values

plot(insecticide$dose, fitted_probit, ylim = c(0, 1),

main = "Fitted Value vs Dose: Probit Model",

ylab = "Probability of Death",

xlab = "Dose",

pch = 19)

points(insecticide$dose, insecticide$deaths/insecticide$m,

col = "red", pch = 8)

legend(0.008, 0.3, legend = c("Fitted", "Observed"),

col = c("black", "red"), pch = c(19, 8))

Figure 4.8: Diagnostic Plot for Probit Model: Fitted Values

From Plots 4.7 we see that most residuals fall within (-2, 2), which we would expect for a model with an adequate fit. From Plot 4.8, the fitted values mostly agree with the observed values, with no large deviations.

Next, we can fit the model using a logit link:

# fit the glm using link = "logit"

insecticide_fit_logit <- glm(response ~ dose,

family = binomial(link = "logit"), data = insecticide)

# look at the model output

summary(insecticide_fit_logit)##

## Call:

## glm(formula = response ~ dose, family = binomial(link = "logit"),

## data = insecticide)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -2.7636 0.3006 -9.195 <2e-16 ***

## dose 1215.5565 103.6385 11.729 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 433.593 on 6 degrees of freedom

## Residual deviance: 13.457 on 5 degrees of freedom

## AIC: 39.287

##

## Number of Fisher Scoring iterations: 5# assess the residuals

# first plot the residual vs dose

resid_logit <- residuals.glm(insecticide_fit_logit, "deviance")

plot(insecticide$dose, resid_logit, ylim = c(-3, 3),

main = "Deviance Residuals vs Dose: logit",

ylab = "Residuals",

xlab = "Dose")

abline(h = -2, lty = 2) # add dotted lines at 2 and -2

abline(h = 2, lty = 2)

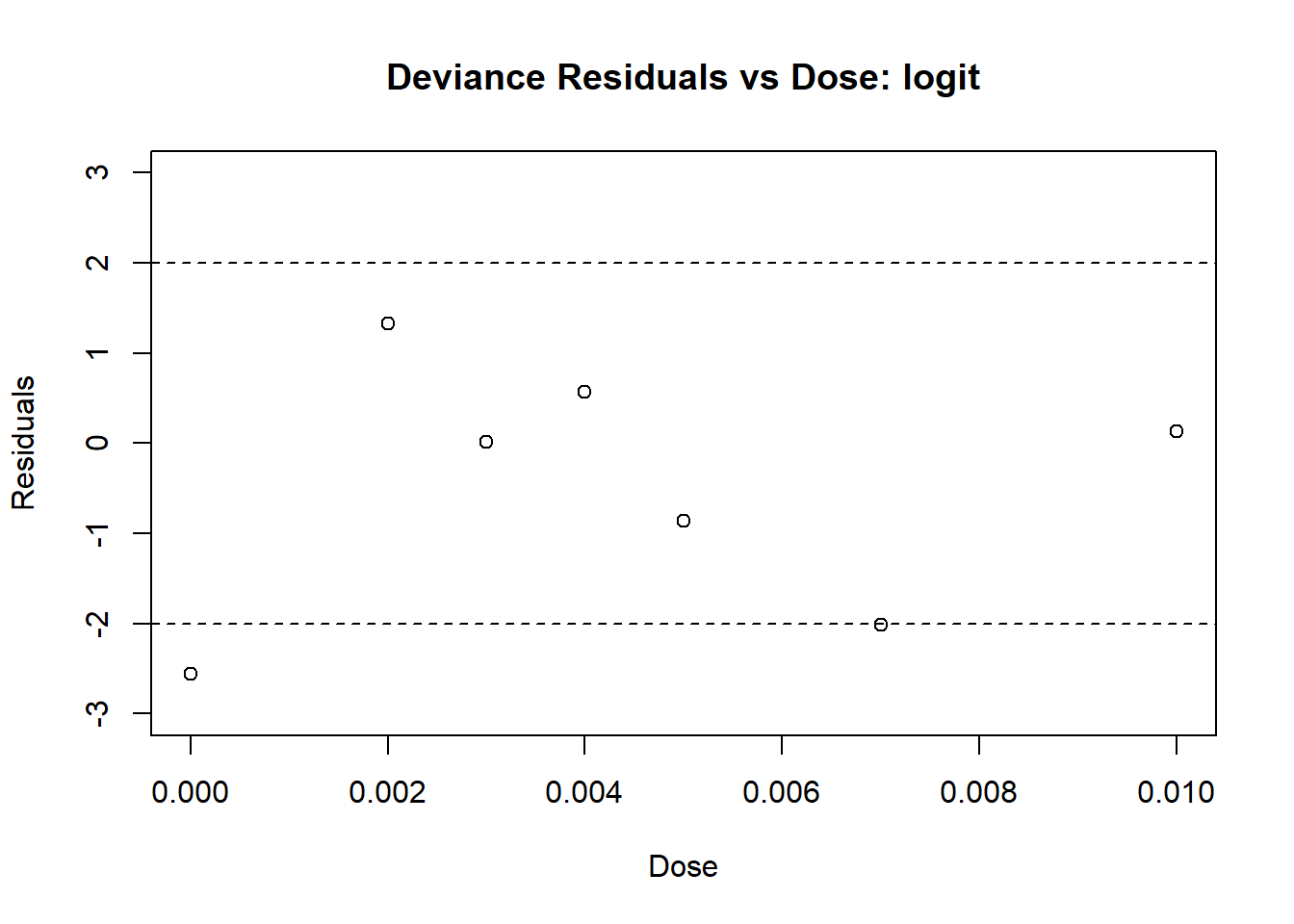

Figure 4.9: Diagnostic Plot for logit Model: Residuals

# then plot the fitted versus dose

fitted_logit <- insecticide_fit_logit$fitted.values

plot(insecticide$dose, fitted_logit, ylim = c(0, 1),

main = "Fitted Value vs Dose: logit Model",

ylab = "Probability of Death",

xlab = "Dose",

pch = 19)

points(insecticide$dose, insecticide$deaths/insecticide$m,

col = "red", pch = 8)

legend(0.008, 0.3, legend = c("Fitted", "Observed"),

col = c("black", "red"), pch = c(19, 8))

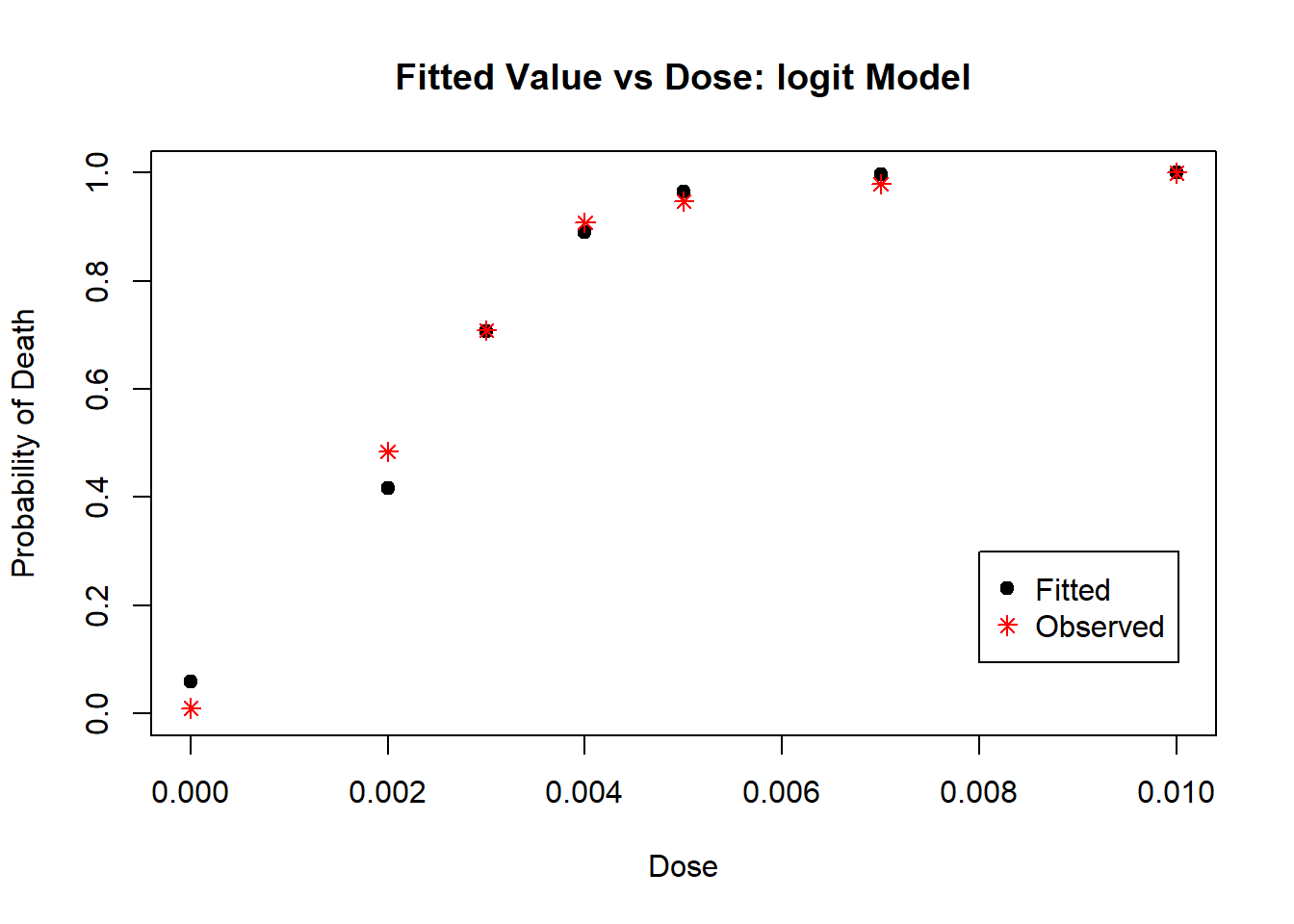

Figure 4.10: Diagnostic Plot for logit Model: Fitted Values

From Plots 4.9 we see that most residuals fall within (-2, 2), which we would expect for a model with an adequate fit. From Plot 4.10, the fitted values mostly agree with the observed values, with no large deviations. This model is very similar to the one with the probit link model.

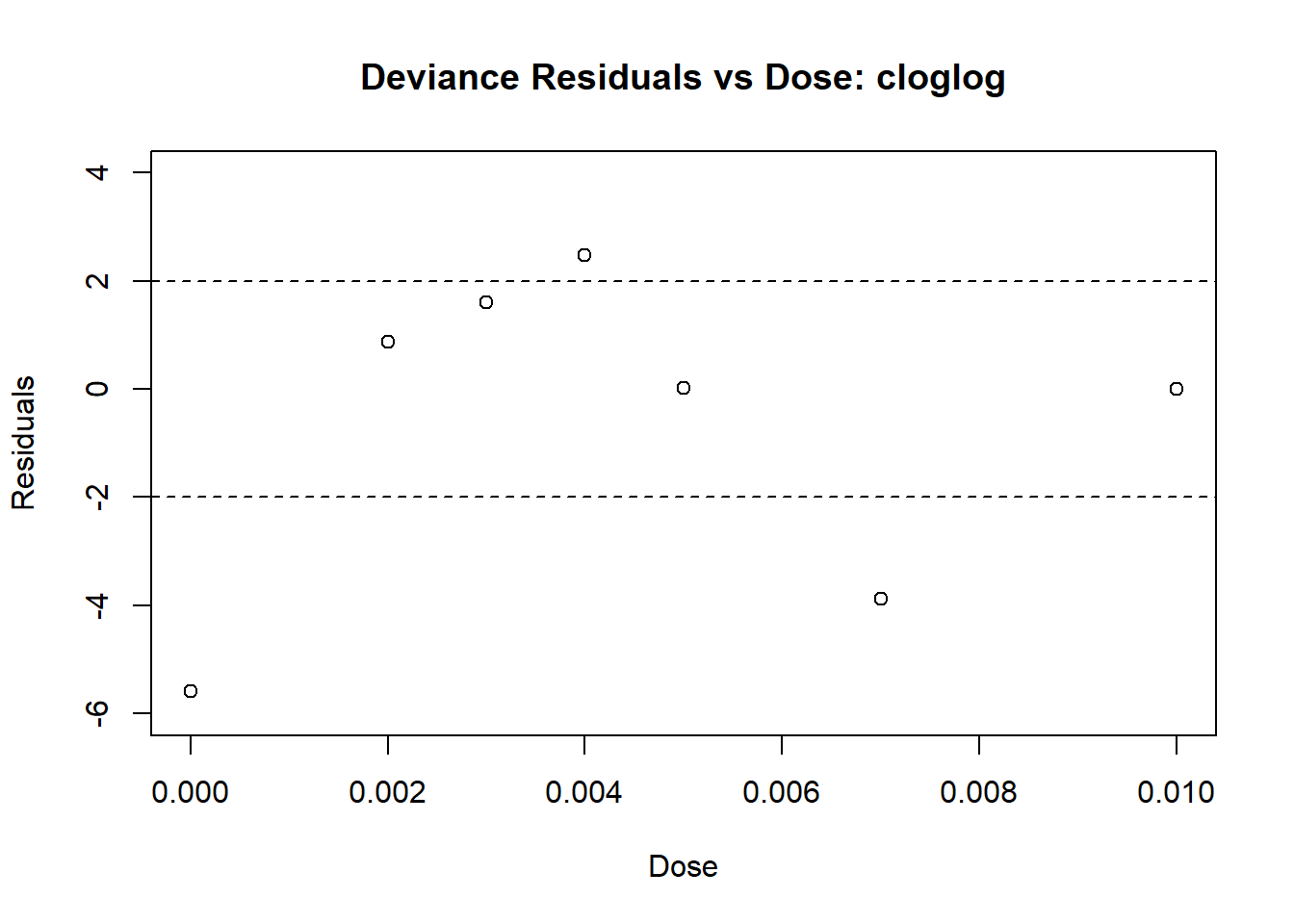

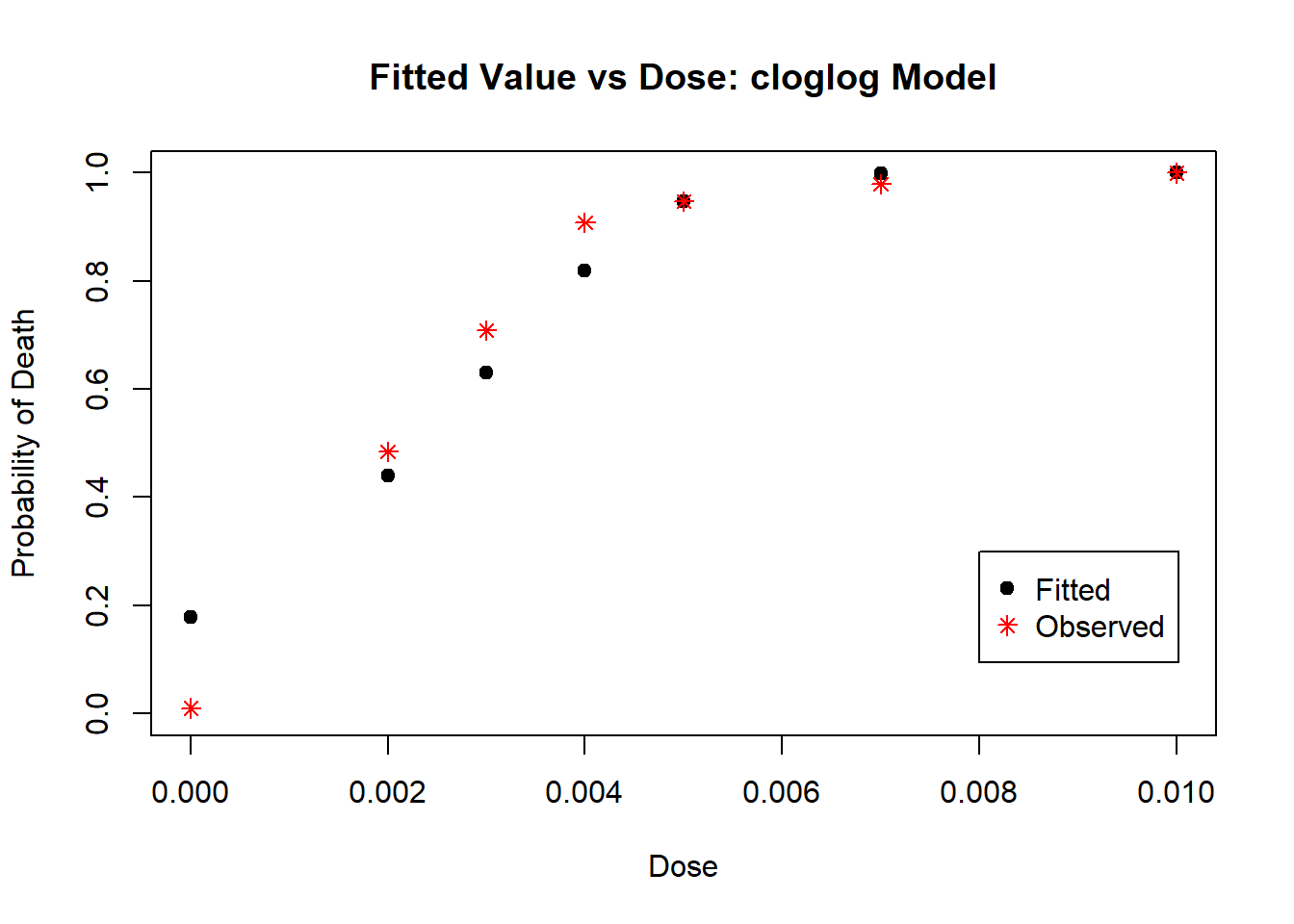

Next, we fit the model using the complimentary log-log link:

# fit the glm using link = "cloglog"

insecticide_fit_cloglog <- glm(response ~ dose,

family = binomial(link = "cloglog"), data = insecticide)## Warning: glm.fit: fitted probabilities numerically 0 or 1 occurred##

## Call:

## glm(formula = response ~ dose, family = binomial(link = "cloglog"),

## data = insecticide)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

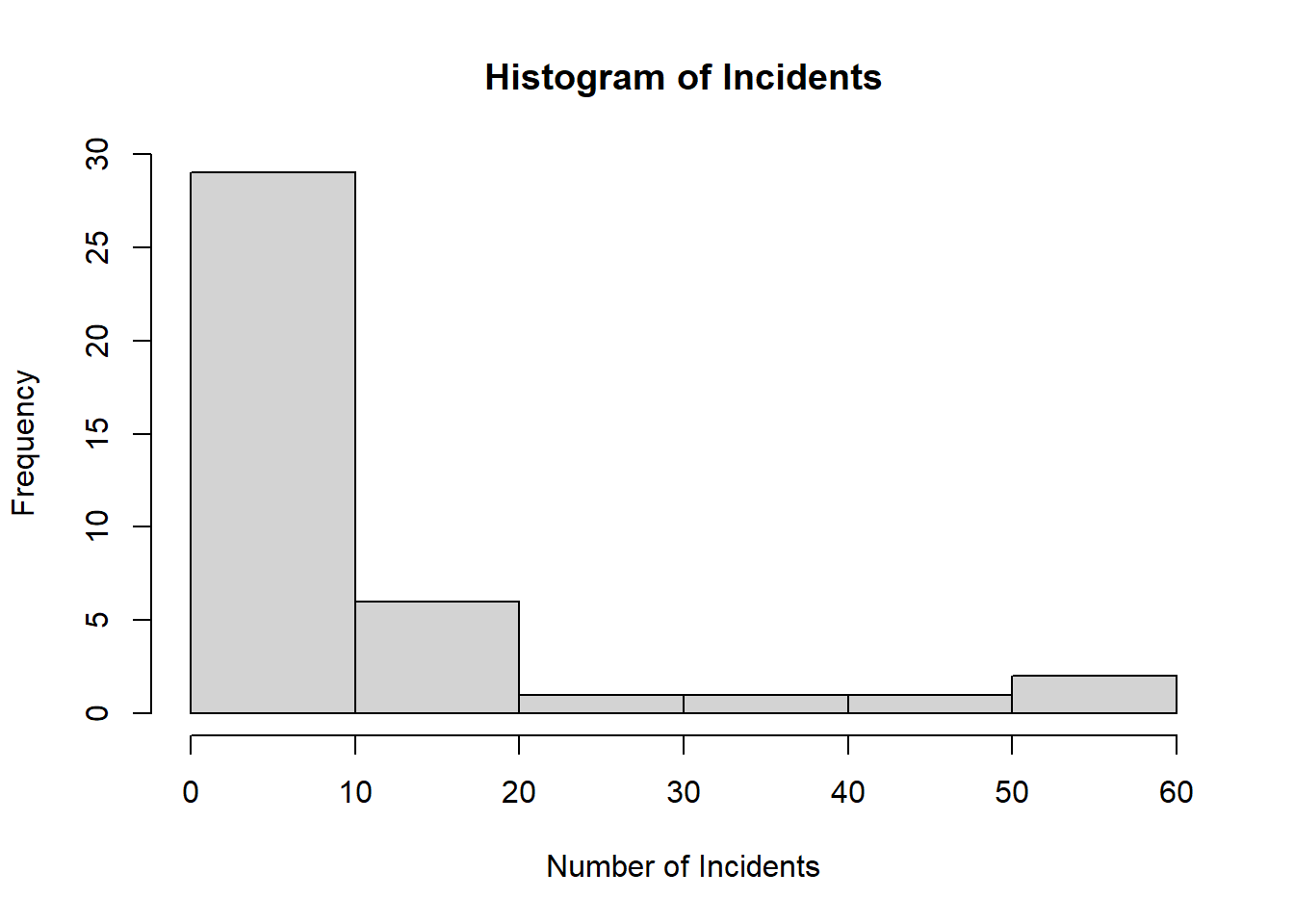

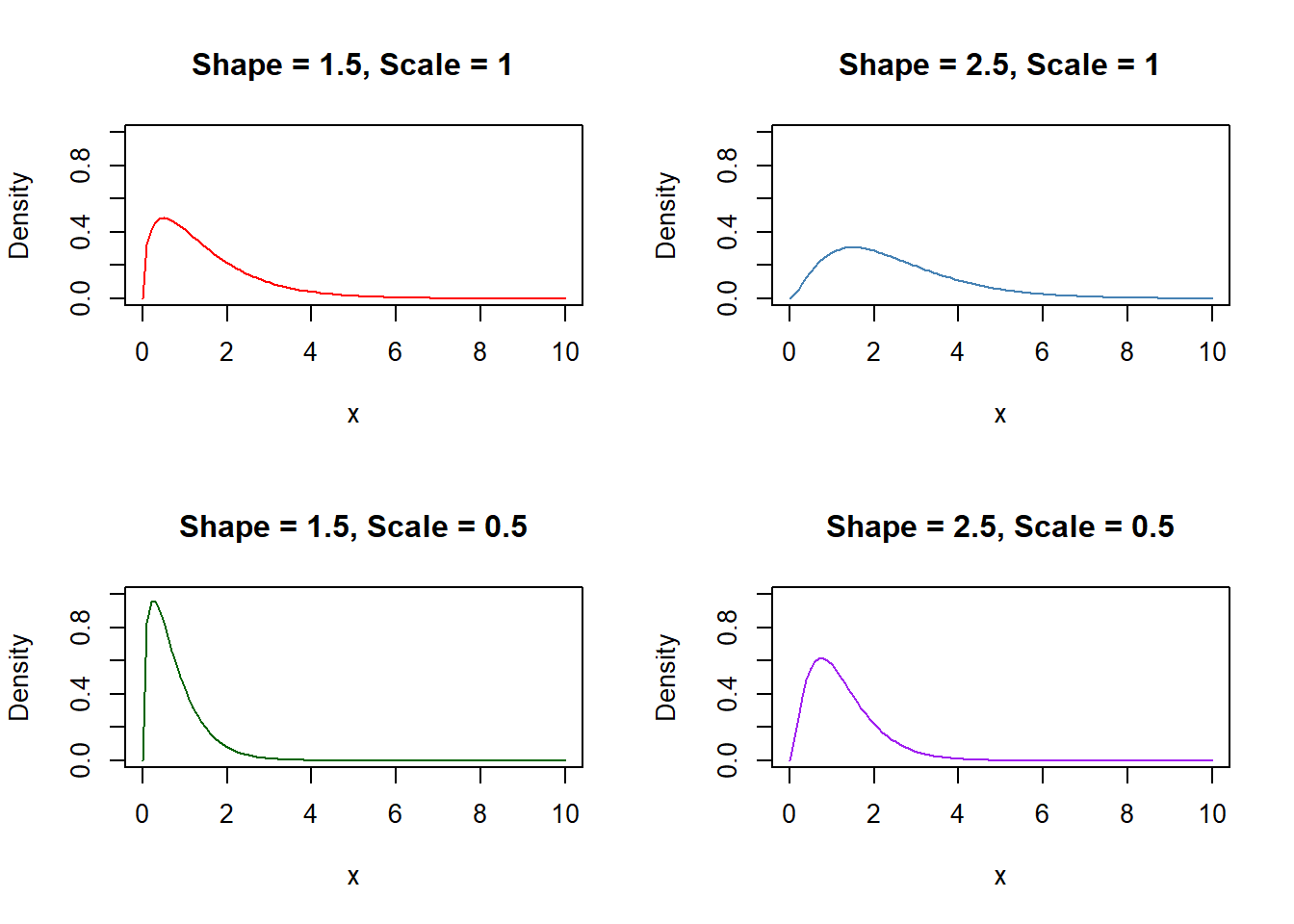

## (Intercept) -1.6246 0.1686 -9.633 <2e-16 ***